bs4 Scraping in Practice, Part 4: Fetching the YinYueTai (音悦台) Charts

Published: 2018-07-13 19:31:34 | Editor: Run

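Target analysis:

This scraper picks a random proxy and a random set of headers for each request to get past anti-scraping measures, and uses them to fetch the MV charts published by YinYueTai (音悦台).

Target site: http://vchart.yinyuetai.com/vchart/trends

The goal is to scrape the MV charts that YinYueTai publishes. Click "V榜" (V Chart) at the top of the site and choose "MV作品榜" (MV Chart) from the drop-down menu, as shown in the figure below.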

Take the mainland chart (内地篇) as an example:

In area=ML, the code ML stands for mainland China.

URL for TOP 1-20: http://vchart.yinyuetai.com/vchart/trends?area=ML&page=1

URL for TOP 21-40: http://vchart.yinyuetai.com/vchart/trends?area=ML&page=2

URL for TOP 41-50: http://vchart.yinyuetai.com/vchart/trends?area=ML&page=3

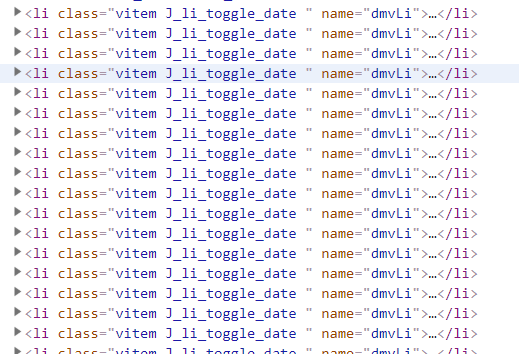
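The paging rule above fits in a small helper. This is a sketch rather than code from the original project: `build_chart_urls` and `BASE_URL` are hypothetical names, and it assumes, as the three example URLs suggest, that every area code uses pages 1 to 3.

```python
from urllib.parse import urlencode

# Hypothetical constant; the path is the one used in the example URLs above
BASE_URL = 'http://vchart.yinyuetai.com/vchart/trends'


def build_chart_urls(area, pages=3):
    """Return one chart URL per page for a given area code such as 'ML'."""
    # urlencode keeps the dict order, so the query reads area=...&page=...
    return ['{}?{}'.format(BASE_URL, urlencode({'area': area, 'page': page}))
            for page in range(1, pages + 1)]


for url in build_chart_urls('ML'):
    print(url)
```

Passing a different area code, for example `build_chart_urls('KR')`, yields the pages of that area's chart in the same way.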

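The codes for the other areas are HT, US, KR, JP and ALL, where ALL is the overall chart. That makes the URL rule clear. Next, work out the scraping rule by inspecting the page source (see the figure):

Each li tag (with name="dmvLi") holds the details of one song, which gives us the scraping rule as well.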
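The rule can be tried offline on a small piece of markup. The HTML below is hypothetical: only the tag names and classes (`li name="dmvLi"`, `div.top_num`, `h3.desc_score` / `h3.asc_score`, `a.mvname`, `a.special`, `p.c9`) come from the selectors that getTrendsMV.py uses later in this article, and the values are invented. `parse_chart` is a hypothetical helper, and the sketch uses the built-in `html.parser` so lxml is not required.

```python
from bs4 import BeautifulSoup

# Hypothetical markup that mirrors the selectors used by getTrendsMV.py
SAMPLE_HTML = """
<ul>
  <li name="dmvLi">
    <div class="top_num">1</div>
    <h3 class="desc_score">95.20</h3>
    <a class="mvname" href="#">Song A</a>
    <a class="special" href="#">Singer A</a>
    <p class="c9">2018-07-01</p>
  </li>
  <li name="dmvLi">
    <div class="top_num">2</div>
    <h3 class="asc_score">93.10</h3>
    <a class="mvname" href="#">Song B</a>
    <a class="special" href="#">Singer B</a>
    <p class="c9">2018-06-28</p>
  </li>
</ul>
"""


def parse_chart(html):
    """Turn every <li name="dmvLi"> into a dict of rank, score, title, singer and date."""
    soup = BeautifulSoup(html, 'html.parser')
    rows = []
    # attrs= is needed because name= is reserved for the tag name in find_all()
    for li in soup.find_all('li', attrs={'name': 'dmvLi'}):
        # The score sits in either h3.desc_score or h3.asc_score
        score = li.find('h3', class_='desc_score') or li.find('h3', class_='asc_score')
        rows.append({
            'top_num': li.find('div', class_='top_num').get_text(strip=True),
            'score': score.get_text(strip=True),
            'mvname': li.find('a', class_='mvname').get_text(strip=True),
            'singer': li.find('a', class_='special').get_text(strip=True),
            'releasetime': li.find('p', class_='c9').get_text(strip=True),
        })
    return rows


for row in parse_chart(SAMPLE_HTML):
    print(row)
```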

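Implementation

Create getTrendsMV.py as the main file and reuse the logging module mylog.py written earlier. Because the scraper needs to vary its proxies and headers, also create a new resource file, resource.py.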

Contents of resource.py:

    #!/usr/bin/env python
    # coding: utf-8

    # Pool of User-Agent strings; one is picked at random for each request
    UserAgents = [
        "Mozilla/5.0 (Windows NT 6.1; WOW64; rv:6.0) Gecko/20100101 Firefox/6.0",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/534.50 (KHTML, like Gecko) Version/5.1 Safari/534.50",
        "Opera/9.80 (Windows NT 6.1; U; zh-cn) Presto/2.9.168 Version/11.50",
        "Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; WOW64; Trident/5.0)",
        "Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0;",
        "Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 6.0; Trident/4.0)",
        "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.0)",
        "Mozilla/5.0 (Macintosh; Intel Mac OS X 10.6; rv:2.0.1) Gecko/20100101 Firefox/4.0.1",
        "Mozilla/5.0 (Windows NT 6.1; rv:2.0.1) Gecko/20100101 Firefox/4.0.1",
        "Opera/9.80 (Macintosh; Intel Mac OS X 10.6.8; U; en) Presto/2.8.131 Version/11.11",
        "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; Maxthon 2.0)",
        "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; TencentTraveler 4.0)",
        "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; The World)",
        "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; 360SE)",
        "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1)",
        "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; Avant Browser)",
    ]

    # Proxy IP addresses. If they no longer work, find a few free ones online.
    # All of these are HTTP proxies.
    PROXIES = [
        "219.141.153.2:80",
        "219.141.153.11:80",
    ]
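The two lists are meant to be sampled per request. The sketch below shows that idea with the standard library only; `USER_AGENTS`, `PROXIES` and `build_request` are hypothetical stand-ins for `resource.UserAgents`, `resource.PROXIES` and the request code in the main program. It builds a per-call opener instead of calling `install_opener()`, so no global state changes, and it does not actually send the request, because free proxies like these tend to stop working quickly.

```python
import random
import urllib.request

# Hypothetical stand-ins for resource.UserAgents and resource.PROXIES
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 6.1; WOW64; rv:6.0) Gecko/20100101 Firefox/6.0",
    "Opera/9.80 (Windows NT 6.1; U; zh-cn) Presto/2.9.168 Version/11.50",
]
PROXIES = ["219.141.153.2:80", "219.141.153.11:80"]


def build_request(url):
    """Return (request, opener) that use a random User-Agent and a random HTTP proxy."""
    headers = {"User-Agent": random.choice(USER_AGENTS)}
    request = urllib.request.Request(url, headers=headers)
    proxy_handler = urllib.request.ProxyHandler({"http": "http://" + random.choice(PROXIES)})
    # The opener is local to this call; install_opener() would make it process-wide
    opener = urllib.request.build_opener(proxy_handler)
    return request, opener


request, opener = build_request("http://vchart.yinyuetai.com/vchart/trends?area=ML&page=1")
# urllib stores header names capitalized, hence "User-agent"
print(request.get_header("User-agent"))
# opener.open(request, timeout=10) would send it through the chosen proxy
```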

The main program, getTrendsMV.py:

#!/usr/bin/env python

# coding: utf-8

from bs4 import BeautifulSoup

import urllib.request

import time

from mylog import MyLog as mylog

import resource

import random

class Item(object):

top_num = None # 排名

score = None # 打分

mvname = None # mv名字

singer = None # 演唱者

releasetime = None # 发布时间

class GetMvList(object):

""" the all data from www.yinyuetai.com

所有的数据都来自www.yinyuetai.com

"""

def __init__(self):

self.urlbase = 'http://vchart.yinyuetai.com/vchart/trends?'

self.areasDic = {

'ALL': '总榜',

'ML': '内地篇',

'HT': '港台篇',

'US': '欧美篇',

'KR': '韩国篇',

'JP': '日本篇',

}

self.log = mylog()

self.geturls()

def geturls(self):

# 获取url池

areas = [i for i in self.areasDic.keys()]

pages = [str(i) for i in range(1, 4)]

for area in areas:

urls = []

for page in pages:

urlEnd = 'area=' + area + '&page=' + page

url = self.urlbase + urlEnd

urls.append(url)

self.log.info('添加URL:{}到URLS'.format(url))

self.spider(area, urls)

def getResponseContent(self, url):

"""从页面返回数据"""

fakeHeaders = {"User-Agent": self.getRandomHeaders()}

request = urllib.request.Request(url, headers=fakeHeaders)

proxy = urllib.request.ProxyHandler({'http': 'http://' + self.getRandomProxy()})

opener = urllib.request.build_opener(proxy)

urllib.request.install_opener(opener)

try:

response = urllib.request.urlopen(request)

html = response.read().decode('utf8')

time.sleep(1)

except Exception as e:

self.log.error('Python 返回 URL:{} 数据失败'.format(url))

return ''

else:

self.log.info('Python 返回 URL:{} 数据成功'.format(url))

return html

def getRandomProxy(self):

# 随机选取Proxy代理地址

return random.choice(resource.PROXIES)

def getRandomHeaders(self):

# 随机选取User-Agent头

return random.choice(resource.UserAgents)

def spider(self, area, urls):

items = []

for url in urls:

responseContent = self.getResponseContent(url)

if not responseContent:

continue

soup = BeautifulSoup(responseContent, 'lxml')

tags = soup.find_all('li', attrs={'name': 'dmvLi'})

for tag in tags:

item = Item()

item.top_num = tag.find('div', attrs={'class': 'top_num'}).get_text()

if tag.find('h3', attrs={'class': 'desc_score'}):

item.score = tag.find('h3', attrs={'class': 'desc_score'}).get_text()

else:

item.score = tag.find('h3', attrs={'class': 'asc_score'}).get_text()

item.mvname = tag.find('a', attrs={'class': 'mvname'}).get_text()

item.singer = tag.find('a', attrs={'class': 'special'}).get_text()

item.releasetime = tag.find('p', attrs={'class': 'c9'}).get_text()

items.append(item)

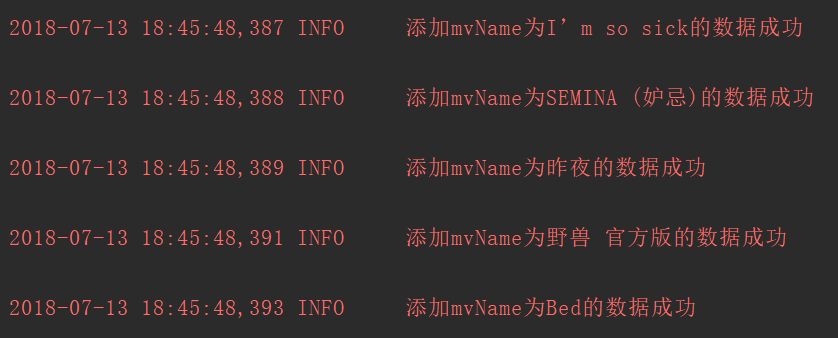

self.log.info('添加mvName为{}的数据成功'.format(item.mvname))

self.pipelines(items, area)

def pipelines(self, items, area):

filename = '音悦台V榜-榜单.txt'

nowtime = time.strftime('%Y-%m-%d %H:%M:S', time.localtime())

with open(filename, 'a', encoding='utf8') as f:

f.write('{} --------- {}\r\n'.format(self.areasDic.get(area), nowtime))

for item in items:

f.write("{} {} \t {} \t {} \t {}\r\n".format(item.top_num,

item.score,

item.releasetime,

item.mvname,

item.singer

))

self.log.info('添加mvname为{}的MV到{}...'.format(item.mvname, filename))

f.write('\r\n'*4)

if __name__ == '__main__':

GetMvList()日志模块mylog.py文件代码如下:

    #!/usr/bin/env python
    # coding: utf-8

    import getpass
    import logging
    import os
    import sys


    # The MyLog class
    class MyLog(object):

        def __init__(self):
            self.user = getpass.getuser()  # current user name, used as the logger name
            self.logger = logging.getLogger(self.user)
            self.logger.setLevel(logging.DEBUG)

            # Log file named after the calling script, e.g. getTrendsMV.py -> getTrendsMV.log
            self.logfile = os.path.splitext(sys.argv[0])[0] + '.log'
            self.formatter = logging.Formatter('%(asctime)-12s %(levelname)-8s %(message)-12s')

            # Attach handlers only once: getLogger() returns the same logger for the
            # same name, so a second MyLog() would otherwise duplicate every message
            if not self.logger.handlers:
                # Write records to the log file ...
                self.logHand = logging.FileHandler(self.logfile, encoding='utf-8')
                self.logHand.setFormatter(self.formatter)
                self.logHand.setLevel(logging.DEBUG)

                # ... and show them on screen
                self.logHandSt = logging.StreamHandler()
                self.logHandSt.setFormatter(self.formatter)
                self.logHandSt.setLevel(logging.DEBUG)

                self.logger.addHandler(self.logHand)
                self.logger.addHandler(self.logHandSt)

        # One method for each of the five log levels
        def debug(self, msg):
            self.logger.debug(msg)

        def info(self, msg):
            self.logger.info(msg)

        def warn(self, msg):
            self.logger.warning(msg)  # Logger.warn() is a deprecated alias of warning()

        def error(self, msg):
            self.logger.error(msg)

        def critical(self, msg):
            self.logger.critical(msg)


    if __name__ == '__main__':
        mylog = MyLog()
        mylog.debug("I'm debug 中文测试")
        mylog.info("I'm info 中文测试")
        mylog.warn("I'm warn 中文测试")
        mylog.error("I'm error 中文测试")
        mylog.critical("I'm critical 中文测试")

Run the main program, getTrendsMV.py.

Partial PyCharm screenshot:

Partial screenshot of the generated file 音悦台V榜-榜单.txt:

Code analysis:

resource.py: the resource file, which mainly stores the User-Agent strings and the proxies.

mylog.py: the logging module, which records information during the crawl.
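MyLog gets its logger with `logging.getLogger(self.user)`, and `getLogger()` returns the same object for the same name. If handlers are added unconditionally, every extra `MyLog()` instance attaches another pair and each message is printed that many times. A minimal sketch of the usual guard, using a hypothetical `get_logger` helper and a console handler only:

```python
import logging


def get_logger(name):
    """Return a logger with exactly one console handler, however often it is requested."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.DEBUG)
    # getLogger() hands back the same logger object for the same name,
    # so only attach a handler the first time
    if not logger.handlers:
        handler = logging.StreamHandler()
        handler.setFormatter(logging.Formatter('%(asctime)s %(levelname)-8s %(message)s'))
        logger.addHandler(handler)
    return logger


log_a = get_logger('spider')
log_b = get_logger('spider')
log_a.info('printed once, not twice')
```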

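getTrendsMV.py: the main program, made up of:

- Item class: modeled on the Item in Scrapy (a crawling framework); it defines the fields to scrape.
- GetMvList class: the main program class.
  - `__init__`: sets up the initial data and automatically calls `self.geturls()`.
  - `geturls`: builds the URL pool and calls `spider` for each area.
  - `getResponseContent`: fetches a page and returns its content.
  - `getRandomProxy`: picks a random proxy address.
  - `getRandomHeaders`: picks a random User-Agent header.
  - `spider`: applies the scraping rule to the returned page and extracts the data.
  - `pipelines`: saves all of the data to the specified txt file.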
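`pipelines` writes one section per area: a header line with the chart name and a timestamp, one tab-separated line per MV, then blank lines. The sketch below builds the same layout as a string so it can be checked without touching the file system. `format_chart` is a hypothetical helper and the row values are made up. Note that the seconds directive in `time.strftime` is `%S`; a bare `S` is copied into the output literally.

```python
import time


def format_chart(title, rows, when=None):
    """Render one chart section in the layout that pipelines() appends to the txt file.

    rows: iterable of (top_num, score, releasetime, mvname, singer) tuples
    """
    stamp = time.strftime('%Y-%m-%d %H:%M:%S', when or time.localtime())
    lines = ['{} --------- {}'.format(title, stamp)]
    for top_num, score, releasetime, mvname, singer in rows:
        lines.append('{} {} \t {} \t {} \t {}'.format(top_num, score, releasetime, mvname, singer))
    # One newline ends the last row, four more separate this section from the next
    return '\n'.join(lines) + '\n' * 5


fixed = time.strptime('2018-07-13 19:31:34', '%Y-%m-%d %H:%M:%S')
print(format_chart('内地篇', [('1', '95.20', '2018-07-01', 'Song A', 'Singer A')], fixed))
```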

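A hand-written bs4 scraper is powerful: its strength is that you can customize it however you like, and its drawback is that it takes more work, because you write all of the code yourself from start to finish.

For smaller projects I recommend a bs4 scraper, since you can write exactly the crawler your task needs.

For large projects, where efficiency, deduplication and similar concerns matter, choose Scrapy instead. Scrapy is a complete Python crawling framework (whereas bs4 is only a parsing module), and it lives up to its reputation.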
