bs4 Crawler in Practice, Part 3: Scraping Movie Information and Saving It to a MySQL Database

Published: 2018-07-12 20:13:03 | Editor: Run

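Target analysis

The target site for this crawler is http://dianying.2345.com. The crawl is limited to this year's movies: open the site's search page and select 2018 under the year filter.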

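How the pages are fetched

Clicking "Next page" at the bottom of the page changes the URL to http://dianying.2345.com/list/----2018---2.html.

Testing http://dianying.2345.com/list/----2018---1.html shows that it also returns normally, so the URL pattern is clear: keep a counter, increment it by 1, and splice it into the URL to get the address of the next page.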
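The URL pattern described above can be sketched in a few lines. This is only an illustration: the function name `build_page_urls` and the `year` parameter are assumptions made for this example, and only the 2018 pattern was actually checked against the site.

```python
def build_page_urls(total_pages, year=2018):
    """Return the list-page URLs for pages 1..total_pages."""
    # Only the 2018 URLs were verified; other years are an untested assumption.
    url_head = 'http://dianying.2345.com/list/----{}---'.format(year)
    url_end = '.html'
    return [url_head + str(page) + url_end
            for page in range(1, total_pages + 1)]


urls = build_page_urls(3)
for url in urls:
    print(url)
```

The counter starts at 1 because page 1 answers under the same pattern as page 2, so no special case is needed for the first page.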

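Next, how many pages are there in total? The pager at the bottom of the list page shows the number of the last page.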
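Reading the page count out of the pager might look like the sketch below. The sample HTML is made up for illustration; it assumes the pager is a `<div class="v_page">` whose second-to-last link holds the last page number, which is what the script in this post relies on. The helper name `get_total_pages` is hypothetical, and `html.parser` is used here only so the example needs nothing beyond bs4.

```python
from bs4 import BeautifulSoup

# Hypothetical pager markup, modelled on what the crawler expects to find.
SAMPLE_PAGER = """
<div class="v_page">
  <a href="#">1</a>
  <a href="#">2</a>
  <a href="#">3</a>
  <a href="#">下一页</a>
</div>
"""


def get_total_pages(html):
    """Return the last page number shown in the pager."""
    soup = BeautifulSoup(html, 'html.parser')
    pager = soup.find('div', attrs={'class': 'v_page'})
    if pager is None:
        raise ValueError('pager <div class="v_page"> not found')
    links = pager.find_all('a')
    # Assumption: the last link is "next page" (下一页), so the link
    # before it carries the number of the last page.
    return int(links[-2].get_text().strip())


total = get_total_pages(SAMPLE_PAGER)
print(total)
```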

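With the total page count found, all that remains is the crawler's filter rule. Right-click a blank area of the page and choose "View page source" from the context menu to inspect the HTML.

Looking for the <li> tags directly would work, but it is more precise to find the <ul> tag first and then search for the <li> tags nested inside it. With that, every element of the crawler is in place and it can be built.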
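The "find the `<ul>` first, then the `<li>` tags inside it" rule can be tried on a small sample. The HTML here is invented for illustration; the class names (`v_picTxt pic180_240 clearfix`, `item-gg`, `sTit`) are the ones the script in this post uses, and the helper name `movie_titles` is hypothetical.

```python
from bs4 import BeautifulSoup

# Made-up markup: one movie list with an ad item, plus an unrelated list.
SAMPLE_LIST = """
<ul class="v_picTxt pic180_240 clearfix">
  <li><span class="sTit">Movie A</span></li>
  <li class="item-gg">advertisement</li>
  <li><span class="sTit">Movie B</span></li>
</ul>
<ul class="other"><li><span class="sTit">Not a movie</span></li></ul>
"""


def movie_titles(html):
    """Return the titles found inside the movie <ul>, skipping the ad <li>."""
    soup = BeautifulSoup(html, 'html.parser')
    movie_list = soup.find('ul', attrs={'class': 'v_picTxt pic180_240 clearfix'})
    if movie_list is None:
        return []
    titles = []
    for li in movie_list.find_all('li'):
        # The ad item has no title span, so it is skipped by its class.
        if 'item-gg' in li.get('class', []):
            continue
        titles.append(li.find('span', attrs={'class': 'sTit'}).get_text().strip())
    return titles


titles = movie_titles(SAMPLE_LIST)
print(titles)
```

Searching inside the `<ul>` keeps `<li>` tags from other lists on the page out of the result, which is the extra precision the paragraph above refers to.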

Implementation

Create a new file named get2018movie.py with the following content:

#!/usr/bin/env python
# coding: utf-8

from bs4 import BeautifulSoup
import urllib.request

from mylog import MyLog as mylog
from save2mysql import SaveMysql


class MovieItem(object):
    moviename = None      # movie title
    moviescore = None     # movie score
    moviestarring = None  # leading actors


class GetMovie(object):
    def __init__(self):
        self.urlBase = 'http://dianying.2345.com/list/----2018---1.html'
        self.log = mylog()
        self.pages = self.getpages()
        self.urls = []
        self.items = []
        self.getUrls(self.pages)
        self.spider(self.urls)
        self.piplines(self.items)
        self.log.info('Saving to MySQL: start')
        SaveMysql(self.items)
        self.log.info('Saving to MySQL: done')

    def getpages(self):
        # Get the total number of pages and return it to the caller as an int
        self.log.info('Fetching the page count')
        htmlcontent = self.getResponseContent(self.urlBase)
        if htmlcontent is None:
            raise RuntimeError('Could not fetch the first list page')
        soup = BeautifulSoup(htmlcontent, 'lxml')
        tag = soup.find('div', attrs={'class': 'v_page'})
        subTags = tag.find_all('a')
        self.log.info('Page count fetched')
        # The second-to-last link in the pager holds the last page number
        return int(subTags[-2].get_text())

    def getResponseContent(self, url):
        # Fetch a page and return its decoded HTML, or None on failure
        fakeHeaders = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) '
                                     'AppleWebKit/537.36 (KHTML, like Gecko) '
                                     'Chrome/67.0.3396.62 Safari/537.36'}
        request = urllib.request.Request(url, headers=fakeHeaders)
        try:
            response = urllib.request.urlopen(request)
            html = response.read().decode('gbk')
        except Exception as e:
            self.log.error('Python failed to fetch URL:{} ({})'.format(url, e))
            return None
        else:
            self.log.info('Python fetched URL:{} successfully'.format(url))
            return html

    def getUrls(self, pages):
        # Build the URL of every list page and collect them in self.urls
        urlHead = 'http://dianying.2345.com/list/----2018---'
        urlEnd = '.html'
        for i in range(1, pages+1):
            url = urlHead + str(i) + urlEnd
            self.urls.append(url)
            self.log.info('Added URL:{} to the URL list'.format(url))

    def spider(self, urls):
        # Filter the information out of each page
        for url in urls:
            htmlcontent = self.getResponseContent(url)
            if htmlcontent is None:
                continue  # skip pages that could not be fetched
            soup = BeautifulSoup(htmlcontent, 'lxml')
            anchorTag = soup.find('ul', attrs={'class': 'v_picTxt pic180_240 clearfix'})
            # While crawling, an anti-scraping trap showed up: an advertisement <li>.
            # It must be excluded, otherwise the program raises an error when it gets there.
            # Find the advertisement <li> tag
            fanpa = anchorTag.find('li', attrs={'class': 'item-gg'})
            tags = anchorTag.find_all('li')
            if fanpa in tags:       # is the ad among the items to scrape?
                tags.remove(fanpa)  # drop the ad
            for tag in tags:
                item = MovieItem()
                item.moviename = tag.find('span', attrs={'class': 'sTit'}).get_text().strip()
                # '分' is the "points" suffix after the score
                item.moviescore = (tag.find('span', attrs={'class': 'pRightBottom'})
                                   .em.get_text().strip().replace('分', ''))
                # '主演:' is the "Starring:" label in front of the actor names
                item.moviestarring = (tag.find('span', attrs={'class': 'sDes'})
                                      .get_text().strip().replace('主演:', ''))
                self.items.append(item)
                self.log.info('Fetched movie 《{}》'.format(item.moviename))

    def piplines(self, items):
        # Process the data: write it to a text file
        filename = '2018热门电影.txt'  # "Popular movies of 2018"
        with open(filename, 'w', encoding='utf-8') as f:
            for item in items:
                f.write("{}\t\t{}\t\t{}\r\n".format(item.moviename, item.moviescore, item.moviestarring))
                self.log.info('Movie 《{}》 saved to file {}...'.format(item.moviename, filename))


if __name__ == '__main__':
    GM = GetMovie()

The code for mylog.py:

#!/usr/bin/env python
# coding: utf-8

import logging
import getpass
import sys


# Define the MyLog class
class MyLog(object):
    def __init__(self):
        self.user = getpass.getuser()  # current user name
        self.logger = logging.getLogger(self.user)
        self.logger.setLevel(logging.DEBUG)
        # Log file name, derived from the name of the calling script
        self.logfile = sys.argv[0][0:-3] + '.log'
        self.formatter = logging.Formatter('%(asctime)-12s %(levelname)-8s %(message)-12s\r\n')
        # Show log records on screen and also write them to the log file
        self.logHand = logging.FileHandler(self.logfile, encoding='utf-8')
        self.logHand.setFormatter(self.formatter)
        self.logHand.setLevel(logging.DEBUG)
        self.logHandSt = logging.StreamHandler()
        self.logHandSt.setFormatter(self.formatter)
        self.logHandSt.setLevel(logging.DEBUG)
        self.logger.addHandler(self.logHand)
        self.logger.addHandler(self.logHandSt)

    # The five log levels map to the five methods below
    def debug(self, msg):
        self.logger.debug(msg)

    def info(self, msg):
        self.logger.info(msg)

    def warn(self, msg):
        # Logger.warn() is deprecated; Logger.warning() is the supported name
        self.logger.warning(msg)

    def error(self, msg):
        self.logger.error(msg)

    def critical(self, msg):
        self.logger.critical(msg)


if __name__ == '__main__':
    mylog = MyLog()
    # "中文测试" means "Chinese test": these calls check that non-ASCII text is logged correctly
    mylog.debug(u"I'm debug 中文测试")
    mylog.info(u"I'm info 中文测试")
    mylog.warn(u"I'm warn 中文测试")
    mylog.error(u"I'm error 中文测试")
    mylog.critical(u"I'm critical 中文测试")

To store the data in a MySQL database, first create the database, the table, and the table structure. You also need to know the database name, IP address, port, account, and password.
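One way to keep those five connection details in a single place is a small settings object. This is only a sketch under stated assumptions: the class `MysqlSettings`, the function `load_settings`, and the `BS4_DB_*` environment variable names are all made up for this example and are not part of the original scripts, which hard-code the values.

```python
import os
from dataclasses import dataclass


@dataclass
class MysqlSettings:
    host: str
    port: int
    user: str
    password: str
    database: str


def load_settings(env=os.environ):
    """Build the settings from a mapping, falling back to defaults."""
    # The BS4_DB_* names are hypothetical; pick whatever fits your setup.
    return MysqlSettings(
        host=env.get('BS4_DB_HOST', '127.0.0.1'),
        port=int(env.get('BS4_DB_PORT', '3306')),
        user=env.get('BS4_DB_USER', 'savemysql'),
        password=env.get('BS4_DB_PASSWORD', ''),
        database=env.get('BS4_DB_NAME', 'bs4DB'),
    )


# Pass a plain dict here instead of os.environ to try it out.
settings = load_settings({'BS4_DB_HOST': '192.168.11.88',
                          'BS4_DB_PASSWORD': 'savemysql123'})
print(settings.host, settings.port, settings.user, settings.database)
```

Reading the password from the environment keeps it out of the source file, which matters once the script is shared.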

Preparation on the MySQL side

mysql> create database bs4DB;
Query OK, 1 row affected (0.06 sec)

mysql> use bs4DB;
Database changed

mysql> create table this_year_movie(id int auto_increment,moviename char(30),moviescore char(10),moviestarring char(40), PRIMARY KEY(id)) ENGINE=InnoDB DEFAULT CHARSET=utf8;
Query OK, 0 rows affected (0.61 sec)

mysql> desc this_year_movie;
+---------------+----------+------+-----+---------+----------------+
| Field         | Type     | Null | Key | Default | Extra          |
+---------------+----------+------+-----+---------+----------------+
| id            | int(11)  | NO   | PRI | NULL    | auto_increment |
| moviename     | char(30) | YES  |     | NULL    |                |
| moviescore    | char(10) | YES  |     | NULL    |                |
| moviestarring | char(40) | YES  |     | NULL    |                |
+---------------+----------+------+-----+---------+----------------+
4 rows in set (0.00 sec)

mysql> use mysql;
Reading table information for completion of table and column names
You can turn off this feature to get a quicker startup with -A

Database changed

mysql> create user 'savemysql'@'%' identified by 'savemysql123';  # create the account and password used for the MySQL connection
Query OK, 0 rows affected (0.12 sec)

mysql> grant all privileges on bs4DB.* to "savemysql"@'%';  # allow savemysql to operate on the bs4DB database only
Query OK, 0 rows affected (0.00 sec)

mysql> flush privileges;
Query OK, 0 rows affected (0.07 sec)
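The columns above are fixed-width (`char(30)`, `char(10)`, `char(40)`), so a long title or cast list may not fit. Depending on the server's SQL mode, an over-length value is either rejected or cut off. A minimal sketch of clipping values on the Python side before inserting follows; the helper `fit_to_columns` and the `COLUMN_WIDTHS` table are assumptions for this example, not part of the original scripts.

```python
# Column widths copied from the CREATE TABLE statement above.
COLUMN_WIDTHS = {'moviename': 30, 'moviescore': 10, 'moviestarring': 40}


def fit_to_columns(row):
    """Return a copy of row with each value clipped to its column width."""
    return {name: str(value)[:COLUMN_WIDTHS[name]]
            for name, value in row.items()}


row = {'moviename': 'A' * 35, 'moviescore': '8.5', 'moviestarring': 'B' * 40}
clipped = fit_to_columns(row)
print({name: len(value) for name, value in clipped.items()})
```

Widening the columns in the table definition is the alternative if losing the tail of a value is not acceptable.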

If pymysql is not installed, run pip3 install pymysql first.

Create a new file named save2mysql.py:

#!/usr/bin/env python
# coding: utf-8

import pymysql


class SaveMysql(object):
    def __init__(self, items):
        self.host = '192.168.11.88'   # IP address of the MySQL server
        self.port = 3306              # port of the MySQL server
        self.user = 'savemysql'       # MySQL user name
        self.passwd = 'savemysql123'  # MySQL password
        self.db = 'bs4DB'             # name of the database to use
        self.run(items)

    def run(self, items):
        conn = pymysql.connect(host=self.host,
                               user=self.user,
                               password=self.passwd,
                               port=self.port,
                               database=self.db,
                               charset='utf8',
                               )
        cur = conn.cursor()
        for item in items:
            # Values are passed separately so the driver escapes them
            cur.execute("insert into this_year_movie(moviename, moviescore, moviestarring) "
                        "values (%s, %s, %s)",
                        (item.moviename, item.moviescore, item.moviestarring))
        cur.close()
        conn.commit()
        conn.close()


if __name__ == '__main__':
    pass

Run the program:

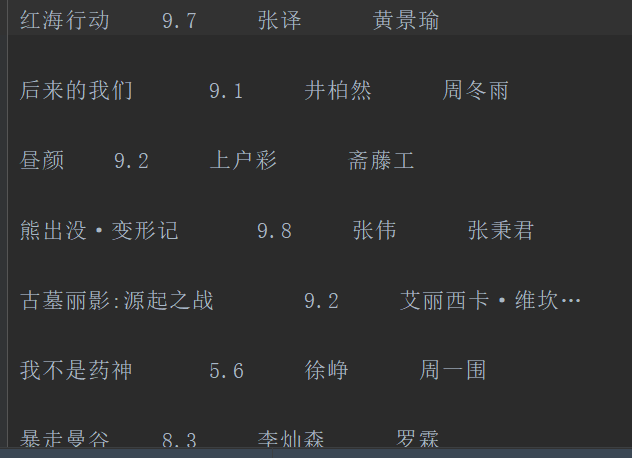

Screenshot of 2018热门电影.txt:

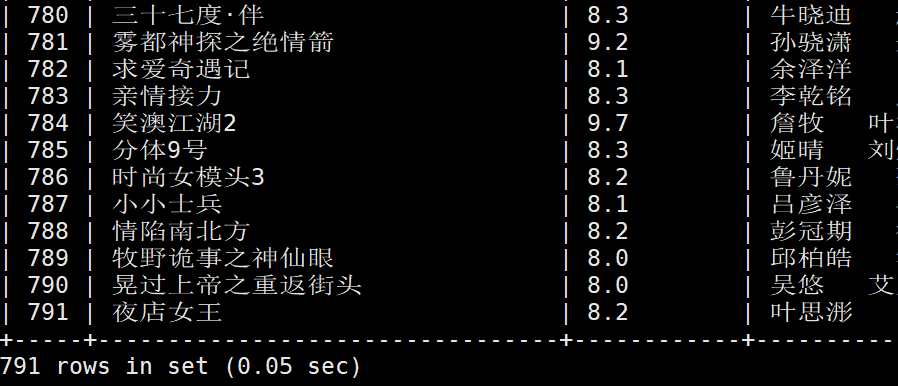

Log in to MySQL and check whether the data was inserted successfully:

mysql> use bs4DB;
Reading table information for completion of table and column names
You can turn off this feature to get a quicker startup with -A

Database changed

mysql> show tables;
+-----------------+
| Tables_in_bs4DB |
+-----------------+
| this_year_movie |
+-----------------+
1 row in set (0.00 sec)

mysql> select * from this_year_movie;

Query result:
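The insert-then-verify flow can be rehearsed without a MySQL server. The sketch below swaps in Python's built-in `sqlite3` module as a stand-in, so it is not the pymysql code from this post: the placeholder is `?` instead of `%s`, the column types are SQLite's, and the two sample movies are invented.

```python
import sqlite3


class MovieItem(object):
    def __init__(self, moviename, moviescore, moviestarring):
        self.moviename = moviename
        self.moviescore = moviescore
        self.moviestarring = moviestarring


# Invented sample data standing in for the scraped items.
items = [MovieItem('Movie A', '8.5', 'Actor One'),
         MovieItem('Movie B', '7.9', 'Actor Two')]

conn = sqlite3.connect(':memory:')  # throwaway in-memory database
conn.execute('create table this_year_movie('
             'id integer primary key autoincrement, '
             'moviename text, moviescore text, moviestarring text)')

# One parameterized statement for all rows; values are never
# formatted into the SQL string.
rows = [(i.moviename, i.moviescore, i.moviestarring) for i in items]
conn.executemany('insert into this_year_movie'
                 '(moviename, moviescore, moviestarring) values (?, ?, ?)', rows)
conn.commit()

# Verify the insert, as the mysql session does with "select *".
count = conn.execute('select count(*) from this_year_movie').fetchone()[0]
names = [r[0] for r in
         conn.execute('select moviename from this_year_movie order by id')]
conn.close()
print(count, names)
```

pymysql cursors also offer `executemany`, so the same batching idea carries over to the MySQL version with `%s` placeholders.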
