Inserting Data into Elasticsearch with Python

Published: 2019-07-30 10:28:30 | Editor: auto

将一个文件中的内容逐条写入elasticsearch中,效率没有写hadoop高,跟kafka更没得比import time

from elasticsearch import Elasticsearch

from collections import OrderedDict

start_time = time.time()

es = Elasticsearch(['localhost:9200'])

temp_list = []

with open('log.out','r',encoding='utf-8')as f:

data_list = f.readlines()

for data in data_list:

temp = OrderedDict()

temp['ServerIp'] = data.split('|')[0]

temp['SpiderType'] = data.split('|')[1]

temp['Level'] = data.split('|')[2]

temp['Date'] = data.split('|')[3]

temp['Type'] = data.split('|')[4]

temp['OffSet'] = data.split('|')[5]

temp['DockerId'] = data.split('|')[6]

temp['WebSiteId'] = data.split('|')[7]

temp['Url'] = data.split('|')[8]

temp['DateStamp'] = data.split('|')[9]

temp['NaviGationId'] = data.split('|')[10]

temp['ParentWebSiteId'] = data.split('|')[11]

temp['TargetUrlNum'] = data.split('|')[12]

temp['Timeconsume'] = data.split('|')[13]

temp['Success'] = data.split('|')[14]

temp['Msg'] = data.split('|')[15]

temp['Extend1'] = data.split('|')[16]

temp['Extend2'] = data.split('|')[17]

temp['Extend3'] = data.split('|')[18]

# temp_list.append(temp)

body = {'ServerIp': temp['ServerIp'],

'SpiderType': temp['SpiderType'],

'Level': temp['Level'],

'Date': temp['Date'],

'Type': temp['Type'],

'OffSet': temp['OffSet'],

'DockerId': temp['DockerId'],

'WebSiteId': temp['WebSiteId'],

'Url': temp['Url'],

'DateStamp': temp['DateStamp'],

'NaviGationId': temp['NaviGationId'],

'ParentWebSiteId': temp['ParentWebSiteId'],

'TargetUrlNum': temp['TargetUrlNum'],

'Timeconsume': temp['Timeconsume'],

'Success': temp['Success'],

'Msg': temp['Msg'],

'Extend1': temp['Extend1'],

'Extend2': temp['Extend2'],

'Extend3': temp['Extend3'],

}

es.index(index='shangjispider', doc_type='spider', body=body, id=None)

end_time = time.time()

t = end_time - start_time

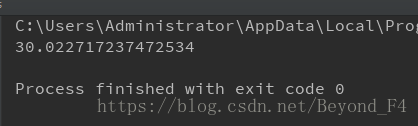

print(t)

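I have to say, this approach is really inefficient: inserting 287 records took 30 s (about 0.1 s per record), which is nowhere near usable in production. Time to look for another way.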
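Separately from the speed problem, the nineteen field assignments can be condensed by zipping a list of field names against the split line. A minimal sketch, assuming every line carries at least 19 '|'-separated fields; FIELDS and parse_line are hypothetical names introduced here for illustration, not part of the original script:

```python
# Field names in the order they appear in each log line (taken from the script above).
FIELDS = [
    'ServerIp', 'SpiderType', 'Level', 'Date', 'Type', 'OffSet', 'DockerId',
    'WebSiteId', 'Url', 'DateStamp', 'NaviGationId', 'ParentWebSiteId',
    'TargetUrlNum', 'Timeconsume', 'Success', 'Msg', 'Extend1', 'Extend2',
    'Extend3',
]


def parse_line(data):
    """Turn one '|'-separated log line into a dict keyed by FIELDS."""
    values = data.rstrip('\n').split('|')
    if len(values) < len(FIELDS):
        raise ValueError(
            'expected {} fields, got {}'.format(len(FIELDS), len(values)))
    # zip stops at the shorter sequence, so extra trailing fields are ignored.
    return dict(zip(FIELDS, values))
```

The resulting dict could be passed directly as the body of es.index. Raising on short lines is a design choice of this sketch; the original script would fail with an IndexError in the same situation.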

-----------------------------------------------------------------------------------------------------------------

After another half day of tinkering I found a new approach, and the efficiency shot way up! Keep your eyes peeled:

import time

from elasticsearch import Elasticsearch

from elasticsearch import helpers

start_time = time.time()

es = Elasticsearch()

actions = []

f = open('log.out', 'r', encoding='utf-8')

data_list = f.readlines()

i = 0

for data in data_list:

line = data.split('|')

action = {

"_index": "haizhen",

"_type": "imagetable",

"_id": i,

"_source": {

'ServerIp': line[0],

'SpiderType': line[1],

'Level': line[2],

'Date': line[3],

'Type': line[4],

'OffSet': line[5],

'DockerId': line[6],

'WebSiteId': line[7],

'Url': line[8],

'DateStamp': line[9],

'NaviGationId': line[10],

'ParentWebSiteId': line[11],

'TargetUrlNum': line[12],

'Timeconsume': line[13],

'Success': line[14],

'Msg': line[15],

'Extend1': line[16],

'Extend2': line[17],

'Extend3': line[18],

}

}

i += 1

actions.append(action)

if len(action) == 1000:

helpers.bulk(es, actions)

del actions[0:len(action)]

if i > 0:

helpers.bulk(es, actions)

end_time = time.time()

t = end_time - start_time

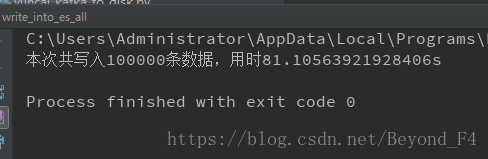

print('本次共写入{}条数据,用时{}s'.format(i, t))见证奇迹的时刻

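The speed is great now. It is more than enough for my needs, so I will leave it at this and look for further improvements when I need them.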
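If this ever needs to scale further, one option is to let helpers.bulk do the batching itself: it accepts any iterable of actions plus a chunk_size argument, so the file can be streamed through a generator instead of being buffered in a list. A rough sketch under those assumptions; generate_actions and bulk_load are hypothetical names introduced here, and the index name is simply the one used above:

```python
FIELDS = [
    'ServerIp', 'SpiderType', 'Level', 'Date', 'Type', 'OffSet', 'DockerId',
    'WebSiteId', 'Url', 'DateStamp', 'NaviGationId', 'ParentWebSiteId',
    'TargetUrlNum', 'Timeconsume', 'Success', 'Msg', 'Extend1', 'Extend2',
    'Extend3',
]


def generate_actions(lines, index, doc_type=None):
    """Yield one bulk action per '|'-separated line, numbering IDs from 0."""
    for i, data in enumerate(lines):
        values = data.rstrip('\n').split('|')
        action = {
            '_index': index,
            '_id': i,
            '_source': dict(zip(FIELDS, values)),
        }
        if doc_type is not None:
            # Only older Elasticsearch versions still accept a mapping type.
            action['_type'] = doc_type
        yield action


def bulk_load(es, path, index='haizhen', doc_type=None):
    """Stream a log file into Elasticsearch, 1000 actions per request."""
    # Imported here so generate_actions can be used without the client installed.
    from elasticsearch import helpers

    with open(path, 'r', encoding='utf-8') as f:
        # helpers.bulk consumes the generator lazily and returns a tuple of
        # (number of successful actions, errors).
        return helpers.bulk(
            es, generate_actions(f, index, doc_type), chunk_size=1000)
```

Calling bulk_load(Elasticsearch(), 'log.out') would then stand in for the manual batching loop; pass doc_type='imagetable' to match the script above on an older cluster. This variant has not been benchmarked against the version above.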

And don't forget to give this post a like!
