Calling a Locally Deployed Qwen Model on vLLM from LangChain 1.0

Published: 2026-01-29 23:43:34 | Editor: 123 | Views: 255

Environment:

NVIDIA GeForce RTX 4070

Driver Version: 572.60

CUDA Version: 12.8

Setup commands:

conda create --name vllm_qwen python=3.10

conda activate vllm_qwen

pip config set global.index-url https://mirrors.aliyun.com/pypi/simple/

pip install vllm openai --upgrade

Download the model:

git clone https://modelscope.cn/models/Qwen/Qwen2.5-0.5B-Instruct ./models
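One thing worth checking after the clone: ModelScope model repositories generally store weights through Git LFS, so if `git lfs` is not installed the `.safetensors` files may arrive as tiny text pointers instead of real weights. Below is a minimal sketch of a sanity check, assuming the `./models` directory from the command above. `is_lfs_pointer` and `find_lfs_pointers` are hypothetical helper names, not part of any library.

```python
from pathlib import Path

# Every Git LFS pointer file starts with this line.
LFS_POINTER_PREFIX = b"version https://git-lfs.github.com/spec/v1"


def is_lfs_pointer(path: Path) -> bool:
    """Return True if the file is a Git LFS pointer rather than real content."""
    with path.open("rb") as fh:
        return fh.read(len(LFS_POINTER_PREFIX)) == LFS_POINTER_PREFIX


def find_lfs_pointers(model_dir: str) -> list[str]:
    """List .safetensors files under model_dir that are still LFS pointers."""
    root = Path(model_dir)
    return sorted(
        str(p.relative_to(root))
        for p in root.rglob("*.safetensors")
        if p.is_file() and is_lfs_pointer(p)
    )
```

Calling `find_lfs_pointers("./models")` should return an empty list when the weights were downloaded properly. A non-empty result suggests running `git lfs install` followed by `git lfs pull` inside the repository.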

Start vLLM's OpenAI-compatible API server:

python -m vllm.entrypoints.openai.api_server \
    --model /home/sam_admin/vllm_qwen/models \
    --host 0.0.0.0 \
    --port 8010 \
    --trust-remote-code \
    --gpu-memory-utilization 0.8 \
    --served-model-name Qwen2.5-0.5B-Instruct

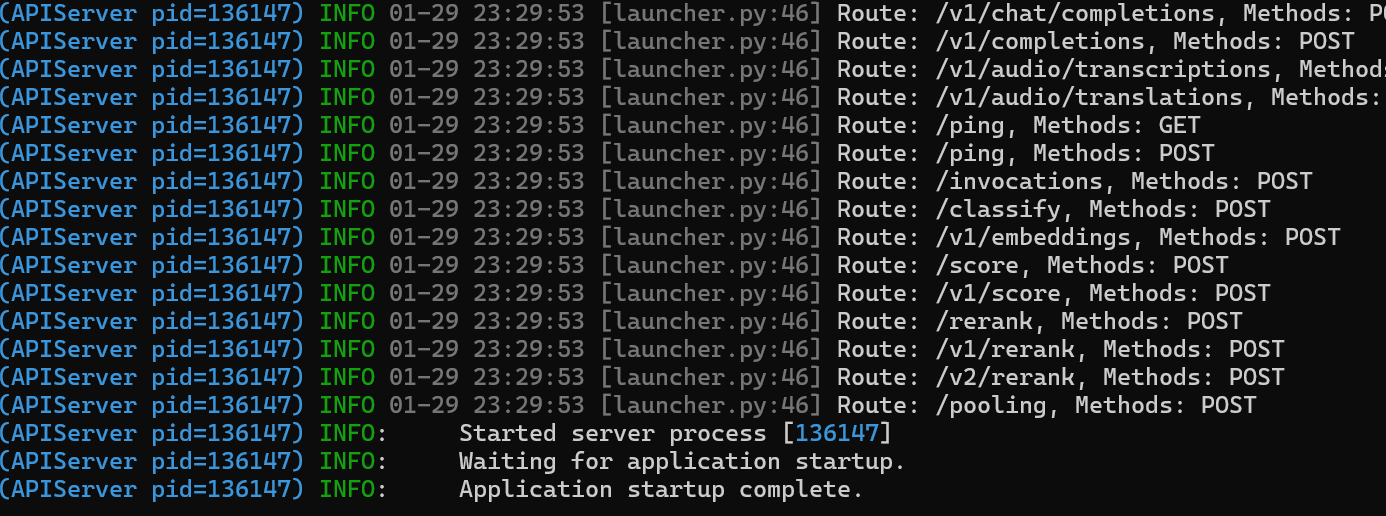

正常启动显示如下:
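Loading the model takes a while, so a client script started right after the server may hit a connection error. Here is a small sketch of a readiness poll using only the standard library. It assumes the `/v1/models` URL used in this post as the probe target; `http_ok` and `wait_until_ready` are hypothetical helper names.

```python
import time
import urllib.error
import urllib.request


def http_ok(url: str, timeout: float = 2.0) -> bool:
    """Return True if a GET request to url answers with HTTP 200."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or HTTP error: not ready yet.
        return False


def wait_until_ready(url, max_wait=120.0, interval=2.0,
                     probe=http_ok, sleep=time.sleep) -> bool:
    """Poll url until probe succeeds or max_wait seconds have passed."""
    deadline = time.monotonic() + max_wait
    while True:
        if probe(url):
            return True
        if time.monotonic() >= deadline:
            return False
        sleep(interval)
```

For the server in this post the call would be `wait_until_ready("http://192.168.5.132:8010/v1/models")`. The `probe` and `sleep` parameters exist only so the loop can be exercised without a running server.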

Check that the API server is reachable by listing the available models:

http://192.168.5.132:8010/v1/models

{
  "object": "list",
  "data": [
    {
      "id": "Qwen2.5-0.5B-Instruct",
      "object": "model",
      "created": 1769700690,
      "owned_by": "vllm",
      "root": "/home/sam_admin/vllm_qwen/models",
      "parent": null,
      "max_model_len": 32768,
      "permission": [
        {
          "id": "modelperm-9de455f806e74752",
          "object": "model_permission",
          "created": 1769700690,
          "allow_create_engine": false,
          "allow_sampling": true,
          "allow_logprobs": true,
          "allow_search_indices": false,
          "allow_view": true,
          "allow_fine_tuning": false,
          "organization": "*",
          "group": null,
          "is_blocking": false
        }
      ]
    }
  ]
}
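The same check can be scripted. The sketch below fetches the model list and reduces it to the two fields that matter for the client code: the `id` to pass as `model`, and `max_model_len`. It relies only on the response shape shown above; `fetch_models` and `summarize_models` are hypothetical helper names.

```python
import json
import urllib.request


def fetch_models(base_url: str, timeout: float = 5.0) -> dict:
    """GET {base_url}/models and return the decoded JSON body."""
    url = f"{base_url.rstrip('/')}/models"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def summarize_models(payload: dict) -> dict:
    """Map each served model id to its max_model_len (None if absent)."""
    return {m["id"]: m.get("max_model_len") for m in payload.get("data", [])}
```

With the server from this post, `summarize_models(fetch_models("http://192.168.5.132:8010/v1"))` would be expected to give `{"Qwen2.5-0.5B-Instruct": 32768}`, matching the JSON above.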

Test compatibility with the OpenAI Python client:

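from openai import OpenAI

client = OpenAI(
    api_key="dummy_key",  # this vLLM server needs no real API key; any non-empty string works
    base_url="http://192.168.5.132:8010/v1",  # key point: use vLLM's OpenAI-compatible /v1 prefix
)

# Call the chat/completions endpoint the same way as with OpenAI
response = client.chat.completions.create(
    model="Qwen2.5-0.5B-Instruct",  # must match --served-model-name from the launch command
    messages=[
        # System prompt: "You are a helpful assistant; keep answers concise."
        {"role": "system", "content": "你是一个乐于助人的助手,回答简洁明了。"},
        # User prompt: "Who are you?"
        {"role": "user", "content": "你是谁"},
    ],
    temperature=0.7,  # sampling temperature, controls randomness
    max_tokens=512,  # maximum number of generated tokens
    stream=False,  # disable streaming and return the complete result
)

print(response.choices[0].message.content)

Output:

我是来自阿里云的大规模语言模型,叫通义千问。 ("I am a large-scale language model from Alibaba Cloud, called Tongyi Qianwen.")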
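The call above sets `stream=False`. With `stream=True` the OpenAI client returns an iterator of chunks, each carrying a small text delta. A minimal sketch of consuming such a stream follows, assuming the same server address and model name as above; `iter_text` and `stream_chat` are hypothetical helper names.

```python
from typing import Iterable, Iterator


def iter_text(chunks: Iterable) -> Iterator[str]:
    """Yield the non-empty text deltas from a stream of chat completion chunks."""
    for chunk in chunks:
        if not chunk.choices:  # some chunks (e.g. usage-only) carry no choices
            continue
        delta = chunk.choices[0].delta.content
        if delta:  # content may be None or "" on role/finish chunks
            yield delta


def stream_chat(prompt: str, base_url: str, model: str) -> str:
    """Send one user message, print tokens as they arrive, return the full text."""
    from openai import OpenAI  # imported here so iter_text has no dependency

    client = OpenAI(api_key="dummy_key", base_url=base_url)
    stream = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
        stream=True,
    )
    parts = []
    for text in iter_text(stream):
        print(text, end="", flush=True)
        parts.append(text)
    print()
    return "".join(parts)
```

For example: `stream_chat("你是谁", "http://192.168.5.132:8010/v1", "Qwen2.5-0.5B-Instruct")`.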

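Test the call from LangChain (this needs the `langchain-openai` package, which the install command above does not include: `pip install langchain-openai`):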

from langchain_openai import ChatOpenAI

llm = ChatOpenAI(
    base_url="http://192.168.5.132:8010/v1",  # vLLM API address
    api_key="token-abc123",  # only matters if vLLM was started with an API key
    model="Qwen2.5-0.5B-Instruct",  # must match vLLM's --served-model-name
    temperature=0.7,
    max_tokens=1024,
    timeout=60,
)

# LangChain batch requests
responses = llm.batch(
    [
        "介绍下你自己",  # "Introduce yourself"
        "请问什么是机器学习",  # "What is machine learning?"
        "你知道机器学习和深度学习区别吗",  # "Do you know how machine learning and deep learning differ?"
    ]
)

for response in responses:
    print(response)

print("=" * 50)

# langchain 并发请求

list_of_inputs = [

"介绍下你自己",

"请问什么是机器学习",

"你知道机器学习和深度学习区别吗"

]

rows=llm.batch(

list_of_inputs,

config={

'max_concurrency': 5, # 最多5个并发

}

)

for row in rows:

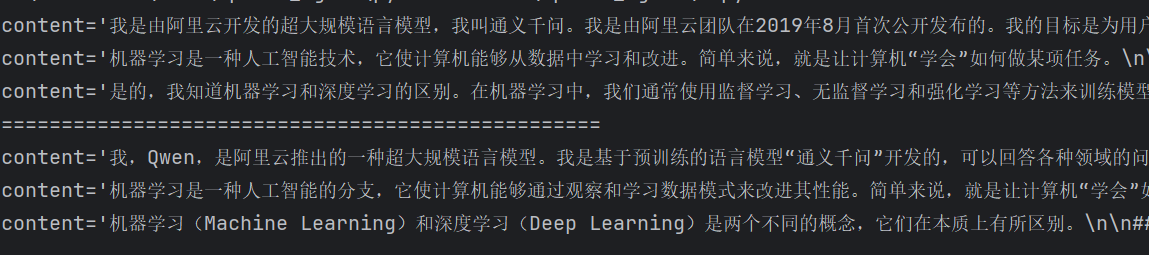

print(row)运行结果:
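To make the effect of `max_concurrency` concrete: as far as I understand, LangChain's default `batch` runs the inputs on a thread pool and `max_concurrency` caps the number of workers, so results come back in input order either way. The sketch below is a rough stand-in built on the standard library, not LangChain's actual implementation. `bounded_map`, `PeakCounter`, and `fake_llm_call` are hypothetical names, and the "LLM call" is just a sleep.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


def bounded_map(func, inputs, max_concurrency):
    """Apply func to inputs with at most max_concurrency calls in flight.

    Results are returned in the same order as the inputs.
    """
    with ThreadPoolExecutor(max_workers=max_concurrency) as pool:
        return list(pool.map(func, inputs))


class PeakCounter:
    """Context manager that records the highest number of concurrent entries."""

    def __init__(self):
        self._lock = threading.Lock()
        self.active = 0
        self.peak = 0

    def __enter__(self):
        with self._lock:
            self.active += 1
            self.peak = max(self.peak, self.active)

    def __exit__(self, *exc):
        with self._lock:
            self.active -= 1


def fake_llm_call(prompt, counter, delay=0.05):
    """Stand-in for a network call to the model: sleep, then echo the prompt."""
    with counter:
        time.sleep(delay)
        return f"echo: {prompt}"
```

Running six fake prompts through `bounded_map` with `max_concurrency=2` leaves `counter.peak` at no more than 2. With a single RTX 4070 behind the server, a low cap like this is one way to avoid piling up requests.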

