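Selenium & PhantomJS in Practice, Part 1: Fetching Proxy IPs

Published: 2018-07-25 08:24:53 | Editor: Run

A web crawler built with Selenium & PhantomJS is best suited to sites that render their content with JavaScript, and it works just as well on other sites.

Environment setup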

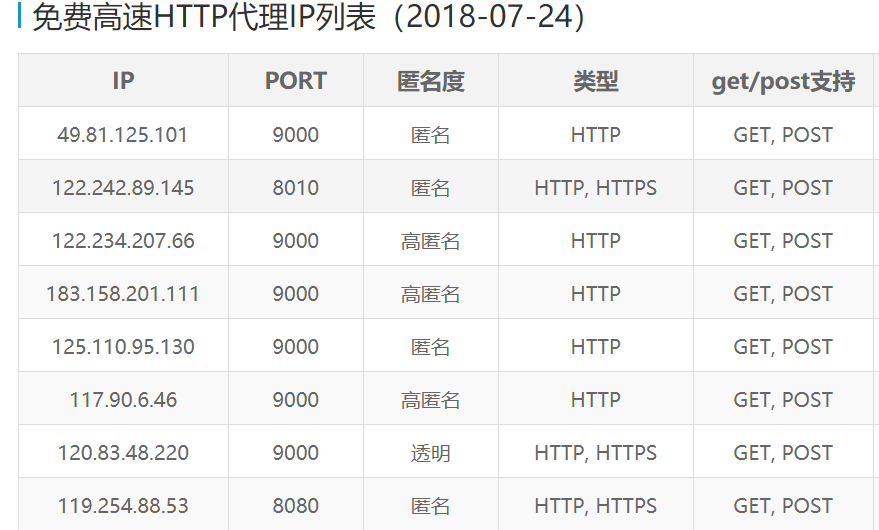

We will collect already-verified proxy servers from https://www.kuaidaili.com/ops/proxylist/1/. Start by opening the target site.

Target analysis:

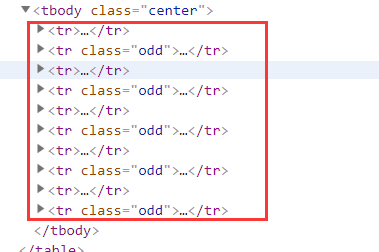

All of the proxy information sits in tr tags, either a plain tr or a tr class='odd'.
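To make the row structure concrete, here is a minimal sketch that walks every tr in a small, hand-written fragment and collects its cell texts. The markup in SAMPLE_HTML and the function name extract_rows are assumptions for illustration only; the real page has more columns and may differ in detail. The standard-library XML parser is used here only because the sample is well-formed, it is not a general HTML parser.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified markup modelled on the description above.
SAMPLE_HTML = """
<div id="freelist">
  <table>
    <tbody class="center">
      <tr><td>10.0.0.1</td><td>8080</td></tr>
      <tr class="odd"><td>10.0.0.2</td><td>3128</td></tr>
    </tbody>
  </table>
</div>
"""


def extract_rows(markup):
    """Return the cell texts of every <tr>, whatever its class attribute is."""
    root = ET.fromstring(markup.strip())
    rows = []
    for tr in root.iter("tr"):
        rows.append([td.text for td in tr.findall("td")])
    return rows


for ip, port in extract_rows(SAMPLE_HTML):
    print("{}:{}".format(ip, port))
```

Because the loop matches on the tag name alone, plain rows and rows marked class='odd' are handled the same way.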

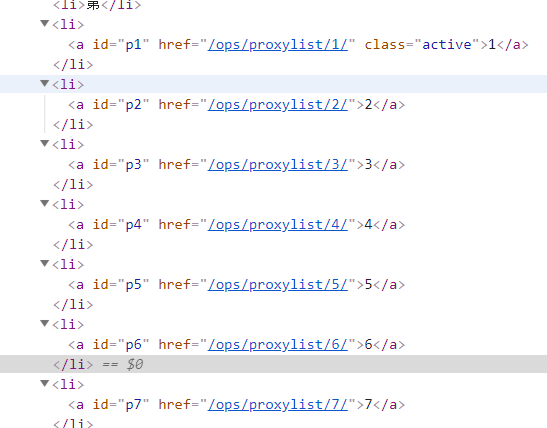

Next, look at the address of the following page: the number at the end of the URL is the page number.
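Since only the trailing number changes, the list of page addresses can be generated rather than typed out. The sketch below assumes the trailing slash seen in the URL earlier in this article; build_page_urls and its parameters are illustrative names, not part of any library.

```python
START_URL = "https://www.kuaidaili.com/ops/proxylist/"


def build_page_urls(start_url, first_page=1, last_page=10):
    """Return one URL per page number, from first_page to last_page inclusive."""
    return [
        "{}{}/".format(start_url, page)
        for page in range(first_page, last_page + 1)
    ]


for url in build_page_urls(START_URL, 1, 3):
    print(url)
```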

Implementation:

In the project directory, create a file named getProxyFromDaili.py with the following code:

#!/usr/bin/env python

# coding: utf-8

from selenium import webdriver

from mylog import MyLog as mylog

class Item(object):

ip = None # 代理ip

port = None # 代理端口

anonymous = None # 是否匿名

type = None # 类型

support = None # 支持的协议

position = None # 位置

responsive_speed = None # 响应速度

final_verification_time = None # 最后验证时间

class GetProxy(object):

def __init__(self):

self.startUrl = 'https://www.kuaidaili.com/ops/proxylist/'

self.log = mylog()

self.urls = self.getUrls()

self.filename = 'proxy.txt'

self.getProxyList(self.urls)

def getUrls(self):

urls = []

for i in range(1, 11):

url = self.startUrl + str(i)

urls.append(url)

self.log.info("添加url:{}到urls列表".format(url))

return urls

def getProxyList(self, urls):

item = Item()

browser = webdriver.PhantomJS()

for url in urls:

browser.get(url)

browser.implicitly_wait(5)

elements = browser.find_elements_by_xpath('//div[@id="freelist"]//tbody[@class="center"]/tr')

for element in elements:

item.ip = element.find_element_by_xpath('./td[1]').text

item.port = element.find_element_by_xpath('./td[2]').text

item.anonymous = element.find_element_by_xpath('./td[3]').text

item.type = element.find_element_by_xpath('./td[4]').text

item.support = element.find_element_by_xpath('./td[5]').text

item.position = element.find_element_by_xpath('./td[6]').text

item.responsive_speed = element.find_element_by_xpath('./td[7]').text

item.final_verification_time = element.find_element_by_xpath('./td[8]').text

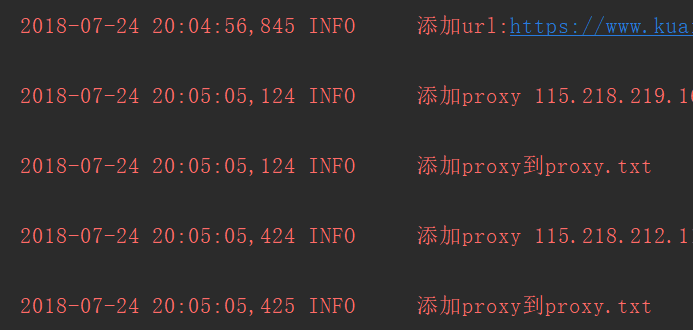

self.log.info('添加proxy {}:{} 到proxyList'.format(item.ip, item.port))

self.log.info('添加proxy到{}'.format(self.filename))

with open(self.filename, 'a', encoding='utf8') as fp:

fp.write("{}\t{}\t{}\t{}\t{}\t{}\t{}\t{}\r\n".format(

item.ip,

item.port,

item.anonymous,

item.type,

item.support,

item.position,

item.responsive_speed,

item.final_verification_time

))

browser.quit()

if __name__ == '__main__':

GP = GetProxy()创建mylog.py文件,代码如下:

```python
#!/usr/bin/env python
# coding: utf-8

import logging
import getpass
import sys


# Define the MyLog class
class MyLog(object):
    def __init__(self):
        self.user = getpass.getuser()  # current user name
        self.logger = logging.getLogger(self.user)
        self.logger.setLevel(logging.DEBUG)

        # Log file name, derived from the name of the calling script
        self.logfile = sys.argv[0][0:-3] + '.log'
        self.formatter = logging.Formatter('%(asctime)-12s %(levelname)-8s %(message)-12s\r\n')

        # Show log records on screen and also write them to the log file
        self.logHand = logging.FileHandler(self.logfile, encoding='utf-8')
        self.logHand.setFormatter(self.formatter)
        self.logHand.setLevel(logging.DEBUG)

        self.logHandSt = logging.StreamHandler()
        self.logHandSt.setFormatter(self.formatter)
        self.logHandSt.setLevel(logging.DEBUG)

        self.logger.addHandler(self.logHand)
        self.logger.addHandler(self.logHandSt)

    # The five log levels map to the following five methods
    def debug(self, msg):
        self.logger.debug(msg)

    def info(self, msg):
        self.logger.info(msg)

    def warn(self, msg):
        # Logger.warn is deprecated; call Logger.warning instead
        self.logger.warning(msg)

    def error(self, msg):
        self.logger.error(msg)

    def critical(self, msg):
        self.logger.critical(msg)


if __name__ == '__main__':
    # The messages keep their Chinese text to check that non-ASCII output works
    mylog = MyLog()
    mylog.debug(u"I'm debug 中文测试")
    mylog.info(u"I'm info 中文测试")
    mylog.warn(u"I'm warn 中文测试")
    mylog.error(u"I'm error 中文测试")
    mylog.critical(u"I'm critical 中文测试")
```

Screenshot of the run in PyCharm
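One thing to watch with a wrapper like MyLog: logging.getLogger returns the same logger object for the same name, so creating the wrapper twice in one process attaches the handlers twice and every message is emitted twice. A possible guard, sketched here with a hypothetical helper get_logger and a made-up logger name, is to attach handlers only when the logger has none yet.

```python
import logging


def get_logger(name, logfile=None):
    """Return a logger, attaching handlers only the first time it is requested."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.DEBUG)
    if not logger.handlers:
        formatter = logging.Formatter("%(asctime)-12s %(levelname)-8s %(message)s")
        stream_handler = logging.StreamHandler()
        stream_handler.setFormatter(formatter)
        logger.addHandler(stream_handler)
        if logfile:
            # Optional file output, UTF-8 so non-ASCII messages survive
            file_handler = logging.FileHandler(logfile, encoding="utf-8")
            file_handler.setFormatter(formatter)
            logger.addHandler(file_handler)
    return logger


first = get_logger("proxy_demo")
second = get_logger("proxy_demo")
second.info("emitted once, even though get_logger was called twice")
```

Both calls return the same logger, and the second call skips the handler setup.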

Screenshot of the proxy.txt file
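The script writes one tab-separated line per proxy, so the file is easy to read back for later use. The following is a rough sketch of that step; the helper names parse_proxy_line and load_proxies are illustrative, and the sample line contains made-up values rather than real scraped data.

```python
# Column order matches the fp.write call in getProxyFromDaili.py
FIELDS = [
    "ip", "port", "anonymous", "type", "support",
    "position", "responsive_speed", "final_verification_time",
]


def parse_proxy_line(line):
    """Turn one tab-separated line into a dict, or None if it is malformed."""
    values = line.rstrip("\r\n").split("\t")
    if len(values) != len(FIELDS):
        return None
    return dict(zip(FIELDS, values))


def load_proxies(path):
    """Read every well-formed record from a file such as proxy.txt."""
    proxies = []
    with open(path, encoding="utf8") as fp:
        for line in fp:
            record = parse_proxy_line(line)
            if record is not None:
                proxies.append(record)
    return proxies


# Made-up sample line in the same layout as the file
sample = "10.0.0.1\t8080\thigh\tHTTP\tGET, POST\texample location\t1s\t2018-07-25 08:00:00\r\n"
record = parse_proxy_line(sample)
print("{}:{}".format(record["ip"], record["port"]))
```

Blank or truncated lines yield None and are skipped, which keeps a partially written file from breaking the loader.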
