Here is a Scrapy example.

Using Scrapy to scrape container numbers from the HBS (Hamburg Süd) shipping line

1. Preparation

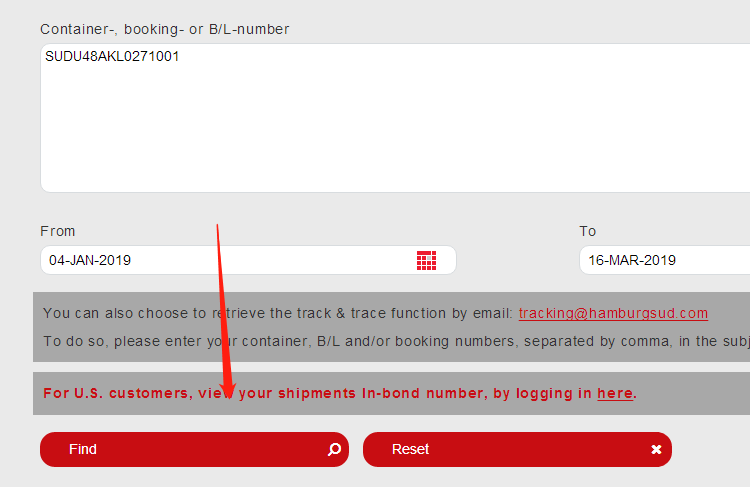

To find out which containers belong to a bill of lading, open the site below, enter the bill of lading number, and click search.

https://www.hamburgsud-line.com/liner/en/liner_services/ecommerce/track_trace/index.html

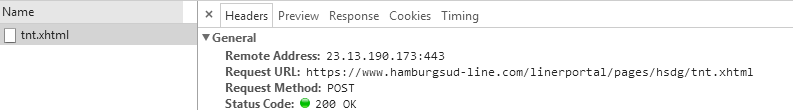

In the browser's Network panel we can see that the request goes to the following URL.

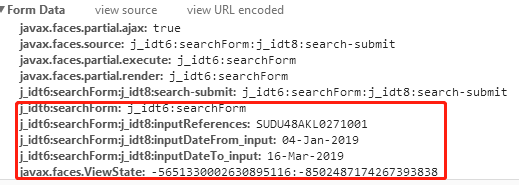

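The request parameters are shown below. Some of them are fixed and some change between requests (the data in the red box in the figure below). Most of the changing parameters are present in the page itself, so we can request the page first, read the parameters it would submit, and then submit them ourselves.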
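The idea of reading the submit parameters out of the page first can be sketched with the standard library alone. This is a minimal illustration, not the spider's code: `SAMPLE_PAGE` is a made-up fragment, the `123:456` ViewState is a placeholder, and `InputCollector` is a hypothetical helper name.

```python
from html.parser import HTMLParser


class InputCollector(HTMLParser):
    """Collect name/value pairs from every <input> that has a name."""

    def __init__(self):
        super().__init__()
        self.fields = {}

    def handle_starttag(self, tag, attrs):
        if tag != "input":
            return
        attributes = dict(attrs)
        name = attributes.get("name")
        if name:
            # An input without a value attribute is stored as an empty string.
            self.fields[name] = attributes.get("value") or ""


# Made-up page fragment; the real page has more fields and different values.
SAMPLE_PAGE = """
<form id="j_idt6:searchForm">
  <input type="hidden" name="j_idt6:searchForm" value="j_idt6:searchForm">
  <input type="text" name="j_idt6:searchForm:j_idt8:inputReferences">
  <input type="hidden" name="javax.faces.ViewState" value="123:456">
</form>
"""

collector = InputCollector()
collector.feed(SAMPLE_PAGE)
page_fields = collector.fields
print(page_fields)
```

The changing values, such as `javax.faces.ViewState`, end up in `page_fields` and can then be merged with the fixed parameters before the POST.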

2. Writing the spider

2.1 First, request the page, extract the changing parameters from it, and combine them

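    # -*- coding: utf-8 -*-
    import scrapy
    from scrapy.http import Request, FormRequest


    class HbsSpider(scrapy.Spider):
        name = "hbs"
        allowed_domains = ["www.hamburgsud-line.com"]

        # URL of the track-and-trace page that holds the search form
        post_url = 'https://www.hamburgsud-line.com/linerportal/pages/hsdg/tnt.xhtml'

        def start_requests(self):
            # Fetch the page first so that its form fields can be read
            yield Request(self.post_url, callback=self.post)

        def post(self, response):
            # Collect the name/value pairs of the page's <input> elements
            sel = response.css('input')
            keys = sel.xpath('./@name').extract()
            values = sel.xpath('./@value').extract()
            inputData = dict(zip(keys, values))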
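One thing to watch with `dict(zip(keys, values))`: the names and the values are extracted as two independent lists, so an `<input>` that has a `name` but no `value` attribute shifts every later value onto the wrong key. Whether the real page contains such an input is not confirmed here. The sketch below uses made-up attribute dictionaries to show the effect and a per-element pairing that avoids it.

```python
# Each dict stands for the attributes of one <input> element (made-up data).
inputs = [
    {"name": "j_idt6:searchForm", "value": "j_idt6:searchForm"},
    {"name": "j_idt6:searchForm:j_idt8:inputReferences"},  # no value attribute
    {"name": "javax.faces.ViewState", "value": "123:456"},
]

# Two independent lists, as produced by extracting @name and @value separately.
keys = [attrs["name"] for attrs in inputs if "name" in attrs]
values = [attrs["value"] for attrs in inputs if "value" in attrs]
zipped = dict(zip(keys, values))

# Pairing per element keeps every value attached to its own name.
paired = {
    attrs["name"]: attrs.get("value", "")
    for attrs in inputs
    if "name" in attrs
}

print(zipped)
print(paired)
```

In the spider, the same per-element pairing could be done by looping over `response.css('input[name]')` and calling `extract_first()` on each element's `./@name` and `./@value`.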

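2.2 Request the data again

1. Submit the fixed parameters together with the parameters extracted from the page

2. Then set the headers so that the request looks like it comes from a browser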

    # Both of these belong inside the HbsSpider class
    post_url = 'https://www.hamburgsud-line.com/linerportal/pages/hsdg/tnt.xhtml'

    def post(self, response):
        sel = response.css('input')
        keys = sel.xpath('./@name').extract()
        values = sel.xpath('./@value').extract()
        inputData = dict(zip(keys, values))

        # Callback for the search page: build a FormRequest and submit the form
        fd = {
            'javax.faces.partial.ajax': 'true',
            'javax.faces.source': 'j_idt6:searchForm:j_idt8:search-submit',
            'javax.faces.partial.execute': 'j_idt6:searchForm',
            'javax.faces.partial.render': 'j_idt6:searchForm',
            'j_idt6:searchForm:j_idt8:search-submit': 'j_idt6:searchForm:j_idt8:search-submit',
            # 'j_idt6:searchForm': 'j_idt6:searchForm',
            'j_idt6:searchForm:j_idt8:inputReferences': self.blNo,
            # 'j_idt6:searchForm:j_idt8:inputDateFrom_input': '04-Jan-2019',
            # 'j_idt6:searchForm:j_idt8:inputDateTo_input': '16-Mar-2019',
            # 'javax.faces.ViewState': '-2735644008488912659:3520516384583764336'
        }
        # Values read from the page override the fixed ones on duplicate keys
        fd.update(inputData)

        # Headers copied from the browser's request view;
        # the ':'-prefixed entries are HTTP/2 pseudo-headers
        headers = {
            ':authority': 'www.hamburgsud-line.com',
            ':method': 'POST',
            ':path': '/linerportal/pages/hsdg/tnt.xhtml',
            ':scheme': 'https',
            # 'accept': 'application/xml,text/xml,*/*;q=0.01',
            # 'accept-language': 'zh-CN,zh;q=0.8',
            'content-type': 'application/x-www-form-urlencoded; charset=UTF-8',
            'faces-request': 'partial/ajax',
            'origin': 'https://www.hamburgsud-line.com',
            'referer': 'https://www.hamburgsud-line.com/linerportal/pages/hsdg/tnt.xhtml',
            'user-agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/55.0.2883.87 Safari/537.36',
            'x-requested-with': 'XMLHttpRequest'
        }
        yield FormRequest.from_response(
            response,
            formdata=fd,
            callback=self.parse_post,
            headers=headers,
        )
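To make the merge step and the `application/x-www-form-urlencoded` content type more concrete, here is a small standard-library sketch. The dictionaries are shortened, and both ViewState values are made-up placeholders. It shows that `update()` lets page values win on duplicate keys and what the encoded POST body looks like.

```python
from urllib.parse import urlencode, parse_qs

# Shortened set of fixed parameters; the ViewState here is a stale placeholder.
fixed = {
    "javax.faces.partial.ajax": "true",
    "javax.faces.partial.execute": "j_idt6:searchForm",
    "j_idt6:searchForm:j_idt8:inputReferences": "SUDU48AKL0271001",
    "javax.faces.ViewState": "stale-placeholder",
}

# Made-up values standing in for what was read from the page.
from_page = {
    "javax.faces.ViewState": "123:456",
}

form_data = dict(fixed)
form_data.update(from_page)  # on duplicate keys the page value wins

# This is the kind of body sent with application/x-www-form-urlencoded.
body = urlencode(form_data)
print(body)

# Decoding the body gives the merged values back.
decoded = parse_qs(body)
print(decoded["javax.faces.ViewState"])
```

Because the merge order decides which value is sent, any key that must keep its fixed value (for example the bill of lading number) should not also appear in the page-derived dictionary.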

3. Parsing the data

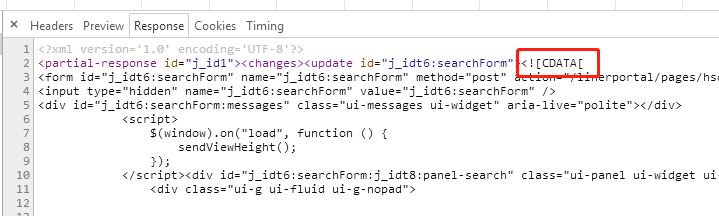

3.1 We can see that the returned data sits inside a CDATA section of the XML. As a first step, we extract the form from it.

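    # Requires: from xml.dom import minidom
    def parse_post(self, response):
        # Parse the XML returned by the form submission
        text = response.text
        xml_data = minidom.parseString(text).getElementsByTagName('update')
        if len(xml_data) > 0:
            # form = xml_data[0].textContent
            # The first child of <update> is the CDATA section holding the form markup
            form = xml_data[0].firstChild.wholeText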
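The CDATA extraction can be tried in isolation on a small document. `SAMPLE_RESPONSE` below is a made-up response in the usual JSF partial-response shape (an assumption about the real payload), and `extract_updates` is a hypothetical helper that returns the text of every `<update>` element keyed by its `id`.

```python
from xml.dom import Node, minidom

# Made-up response; the real one carries the full form markup.
SAMPLE_RESPONSE = (
    '<partial-response><changes>'
    '<update id="j_idt6:searchForm"><![CDATA[<form id="j_idt6:searchForm">'
    '<table role="grid"></table></form>]]></update>'
    '<update id="javax.faces.ViewState"><![CDATA[123:456]]></update>'
    '</changes></partial-response>'
)


def extract_updates(xml_text):
    """Return a dict mapping each <update> id to its text content."""
    document = minidom.parseString(xml_text)
    updates = {}
    for node in document.getElementsByTagName("update"):
        # Join all text and CDATA children of this <update> element.
        text = "".join(
            child.data
            for child in node.childNodes
            if child.nodeType in (Node.TEXT_NODE, Node.CDATA_SECTION_NODE)
        )
        updates[node.getAttribute("id")] = text
    return updates


updates = extract_updates(SAMPLE_RESPONSE)
print(updates["j_idt6:searchForm"])
```

Keying the result by `id` makes it possible to pick the form update explicitly instead of relying on it being the first `<update>` in the response.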

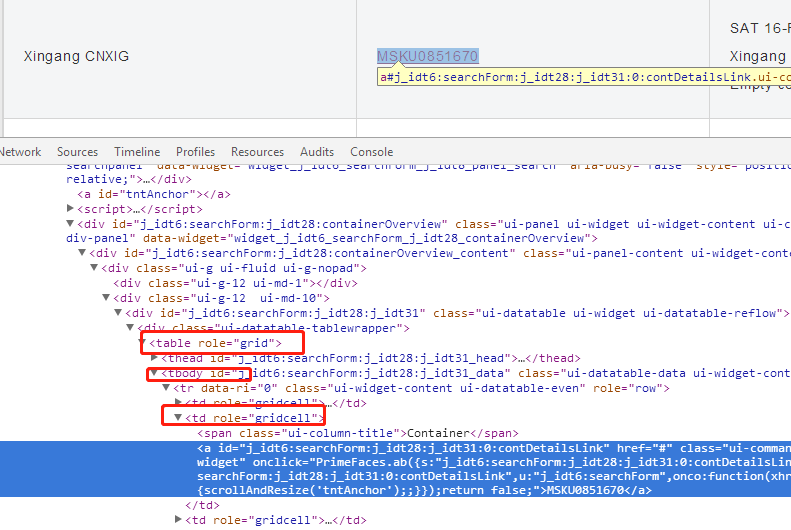

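3.2 Next we locate the container elements. A single bill of lading often covers many containers, and if we looked the elements up by the ids that the site generates automatically, parsing could break as soon as we scrape a different bill of lading number.

So instead of locating elements by id, we use another approach.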

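    # Requires: from scrapy.selector import Selector
    selector = Selector(text=form)
    # Select the rows by table structure instead of by generated ids
    trs = selector.css("table[role=grid] tbody tr")
    for tr in trs:
        print(tr)
        # The container number is the link text in the second cell of each row
        td = tr.css("td:nth-child(2)>a::text")
        yield {
            'containerNo': td.extract()
        }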
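The same structural lookup can be illustrated without Scrapy. The sketch below uses `xml.etree.ElementTree`, so it assumes well-formed markup, which real pages often are not (the spider uses Scrapy's `Selector` for that reason). The table, the element ids and the container numbers are all made up.

```python
import xml.etree.ElementTree as ET

# Made-up, well-formed form markup with generated-looking ids.
SAMPLE_FORM = """
<form id="j_idt6:searchForm">
  <table role="grid">
    <tbody>
      <tr><td>1</td><td><a id="j_idt6:searchForm:j_idt24:0:link">ABCD1234567</a></td></tr>
      <tr><td>2</td><td><a id="j_idt6:searchForm:j_idt24:1:link">EFGH7654321</a></td></tr>
    </tbody>
  </table>
</form>
"""


def container_numbers(form_markup):
    """Return the link text in the second cell of every grid row."""
    root = ET.fromstring(form_markup.strip())
    numbers = []
    # Rows are found by table role and position, never by element id.
    for row in root.findall(".//table[@role='grid']/tbody/tr"):
        link = row.find("td[2]/a")
        if link is not None and link.text:
            numbers.append(link.text.strip())
    return numbers


print(container_numbers(SAMPLE_FORM))
```

Since the lookup depends only on the table role and the cell position, it keeps working when the generated ids differ from one bill of lading to the next.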

4. Running

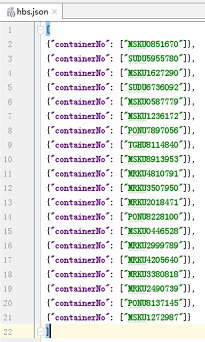

>scrapy crawl hbs -o hbs.json

As you can see, the scraped data is as follows.

PS: Remember to set ROBOTSTXT_OBEY to False in the settings, otherwise the crawl may fail.

ROBOTSTXT_OBEY = False
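Once the run has written `hbs.json`, the items can be read back with the standard library. Because the spider yields `td.extract()`, which returns a list, each item's `containerNo` is a list of strings. The sketch below flattens such items into one list. `SAMPLE_OUTPUT` is made-up data in that shape, and `flatten_container_numbers` is a hypothetical helper.

```python
import json

# Made-up content in the shape the spider's items would have.
SAMPLE_OUTPUT = (
    '[{"containerNo": ["ABCD1234567"]},'
    ' {"containerNo": []},'
    ' {"containerNo": ["EFGH7654321 "]}]'
)


def flatten_container_numbers(json_text):
    """Collect all non-empty container numbers from the exported items."""
    numbers = []
    for item in json.loads(json_text):
        value = item.get("containerNo", [])
        if isinstance(value, str):
            # Tolerate items that store a single string instead of a list.
            value = [value]
        numbers.extend(v.strip() for v in value if v.strip())
    return numbers


print(flatten_container_numbers(SAMPLE_OUTPUT))
```

For a real run, the text would come from the file instead, for example by opening `hbs.json` and passing its contents to the function.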

5. Code

    # -*- coding: utf-8 -*-
    import scrapy
    from scrapy.http import Request, FormRequest
    from xml.dom import minidom
    from scrapy.selector import Selector


    class HbsSpider(scrapy.Spider):
        name = "hbs"
        allowed_domains = ["www.hamburgsud-line.com"]
        # start_urls = ['https://www.hamburgsud-line.com/linerportal/pages/hsdg/tnt.xhtml']

        def __init__(self, blNo='SUDU48AKL0271001', *args, **kwargs):
            super(HbsSpider, self).__init__(*args, **kwargs)
            # Bill of lading number to look up
            self.blNo = blNo

        # ---------------------------- Submit ----------------------------
        # URL of the page that holds the search form
        post_url = 'https://www.hamburgsud-line.com/linerportal/pages/hsdg/tnt.xhtml'

        def start_requests(self):
            # Fetch the page first so that its form fields can be read
            yield Request(self.post_url, callback=self.post)

        def post(self, response):
            # Collect the name/value pairs of the page's <input> elements
            sel = response.css('input')
            keys = sel.xpath('./@name').extract()
            values = sel.xpath('./@value').extract()
            inputData = dict(zip(keys, values))

            # Callback for the search page: build a FormRequest and submit the form.
            # The generated id prefix differs between page versions, so handle both.
            if "j_idt6:searchForm" in inputData:
                fd = {
                    'javax.faces.partial.ajax': 'true',
                    'javax.faces.source': 'j_idt6:searchForm:j_idt8:search-submit',
                    'javax.faces.partial.execute': 'j_idt6:searchForm',
                    'javax.faces.partial.render': 'j_idt6:searchForm',
                    'j_idt6:searchForm:j_idt8:search-submit': 'j_idt6:searchForm:j_idt8:search-submit',
                    # 'j_idt6:searchForm': 'j_idt6:searchForm',
                    'j_idt6:searchForm:j_idt8:inputReferences': self.blNo,
                    # 'j_idt6:searchForm:j_idt8:inputDateFrom_input': '04-Jan-2019',
                    # 'j_idt6:searchForm:j_idt8:inputDateTo_input': '16-Mar-2019',
                    # 'javax.faces.ViewState': '-2735644008488912659:3520516384583764336'
                }
            else:
                fd = {
                    'javax.faces.partial.ajax': 'true',
                    'javax.faces.source': 'j_idt7:searchForm:j_idt9:search-submit',
                    'javax.faces.partial.execute': 'j_idt7:searchForm',
                    'javax.faces.partial.render': 'j_idt7:searchForm',
                    'j_idt7:searchForm:j_idt9:search-submit': 'j_idt7:searchForm:j_idt9:search-submit',
                    # 'javax.faces.ViewState:': '-1349351850393148019:-4619609355387768827',
                    # 'j_idt7:searchForm:': 'j_idt7:searchForm',
                    # 'j_idt7:searchForm:j_idt9:inputDateFrom_input':'13-Dec-2018',
                    # 'j_idt7:searchForm:j_idt9:inputDateTo_input':'22-Feb-2019',
                    'j_idt7:searchForm:j_idt9:inputReferences': self.blNo
                }
            # Values read from the page override the fixed ones on duplicate keys
            fd.update(inputData)

            # Headers copied from the browser's request view;
            # the ':'-prefixed entries are HTTP/2 pseudo-headers
            headers = {
                ':authority': 'www.hamburgsud-line.com',
                ':method': 'POST',
                ':path': '/linerportal/pages/hsdg/tnt.xhtml',
                ':scheme': 'https',
                # 'accept': 'application/xml,text/xml,*/*;q=0.01',
                # 'accept-language': 'zh-CN,zh;q=0.8',
                'content-type': 'application/x-www-form-urlencoded; charset=UTF-8',
                'faces-request': 'partial/ajax',
                'origin': 'https://www.hamburgsud-line.com',
                'referer': 'https://www.hamburgsud-line.com/linerportal/pages/hsdg/tnt.xhtml',
                'user-agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/55.0.2883.87 Safari/537.36',
                'x-requested-with': 'XMLHttpRequest'
            }
            yield FormRequest.from_response(
                response,
                formdata=fd,
                callback=self.parse_post,
                headers=headers,
            )

        def parse_post(self, response):
            # Parse the XML returned by the form submission
            text = response.text
            xml_data = minidom.parseString(text).getElementsByTagName('update')
            if len(xml_data) > 0:
                # form = xml_data[0].textContent
                # The first child of <update> is the CDATA section holding the form markup
                form = xml_data[0].firstChild.wholeText
                selector = Selector(text=form)
                # Select the rows by table structure instead of by generated ids
                trs = selector.css("table[role=grid] tbody tr")
                for tr in trs:
                    print(tr)
                    # The container number is the link text in the second cell of each row
                    td = tr.css("td:nth-child(2)>a::text")
                    yield {
                        'containerNo': td.extract()
                    }