requests project in practice: scraping the Maoyan movie rankings

Published: 2019-05-06 00:25:44 | Editor: Run


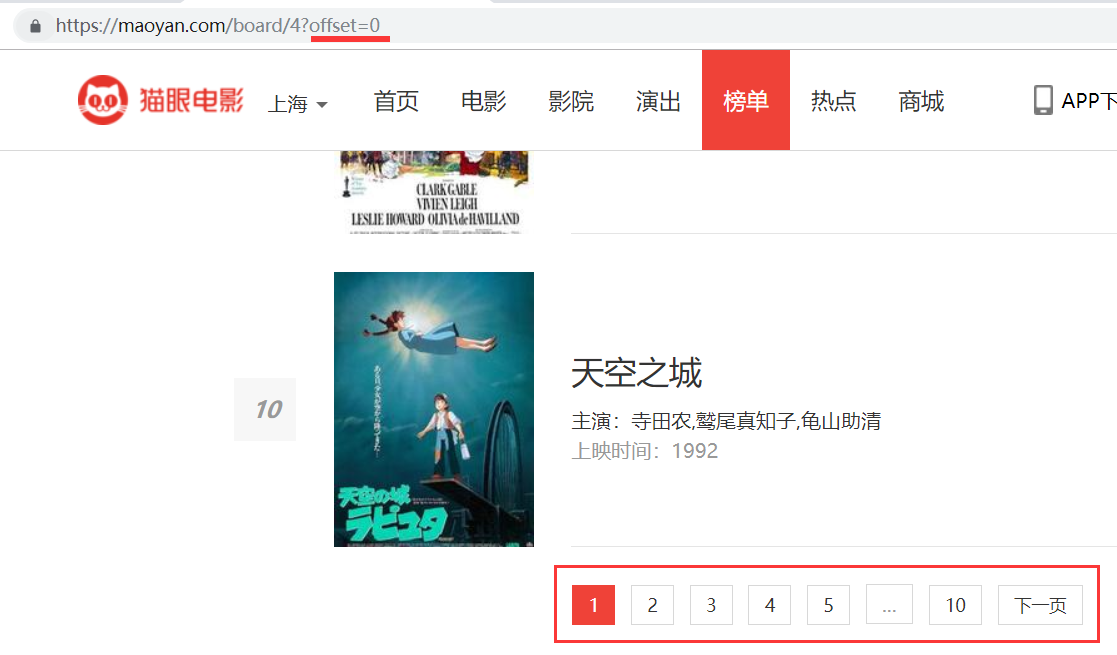

Target URL: https://maoyan.com/board/4?offset=0

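Goal: extract the name, starring actors, release date, score and poster image of every film in the Maoyan TOP100 list, and save the results to a text file.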

Environment: install the requests library, plus lxml for XPath parsing:

pip3 install requests

pip3 install lxml

Crawl analysis:

offset is the paging offset. The list spans 10 pages of 10 movies each, so offset=90 is the last page, and increasing offset by 10 gives the URL of the next page.
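As a minimal sketch, the ten page URLs can be built up front from this offset rule. BASE_URL and page_urls are hypothetical names used only for illustration; the full code builds the same URL inside main().

```python
# Hypothetical helper: the base URL is the target URL without its offset value.
BASE_URL = 'https://maoyan.com/board/4?offset='


def page_urls(pages=10, per_page=10):
    """Return the board URL for every page: offset 0, 10, ..., 90."""
    return [BASE_URL + str(page * per_page) for page in range(pages)]


for url in page_urls():
    print(url)
```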

XPath extraction:

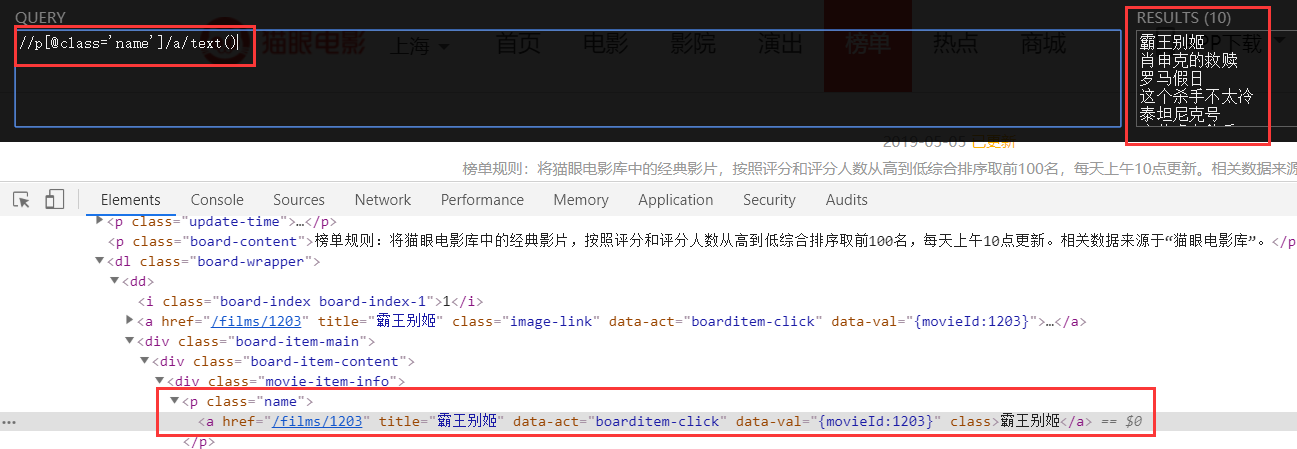

Get the names of all movies on a page:

//p[@class='name']/a/text()

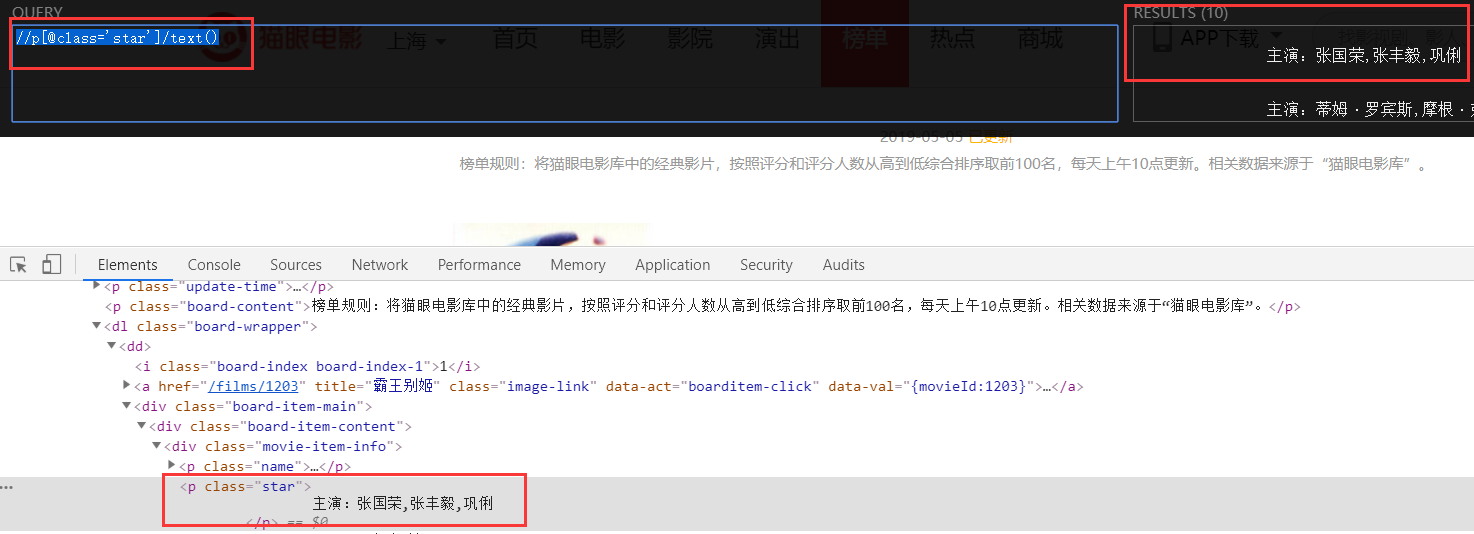
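To see what the name expression returns, here is a minimal sketch run against a hypothetical, heavily simplified HTML snippet. Only the class names are taken from the XPath above; the markup and the movie titles are made up. The result is a Python list with one entry per movie on the page.

```python
from lxml import etree

# Hypothetical, simplified markup: only the class names come from the article.
SAMPLE = """
<dl class="board-wrapper">
  <dd><p class="name"><a href="#">Movie A</a></p></dd>
  <dd><p class="name"><a href="#">Movie B</a></p></dd>
</dl>
"""

tree = etree.HTML(SAMPLE)  # parse the HTML string into an element tree
names = tree.xpath("//p[@class='name']/a/text()")  # text of every <a> under p.name
print(names)
```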

Get the starring actors of all movies on a page:

//p[@class='star']/text()

Get the release dates of all movies on a page:

//p[@class='releasetime']/text()

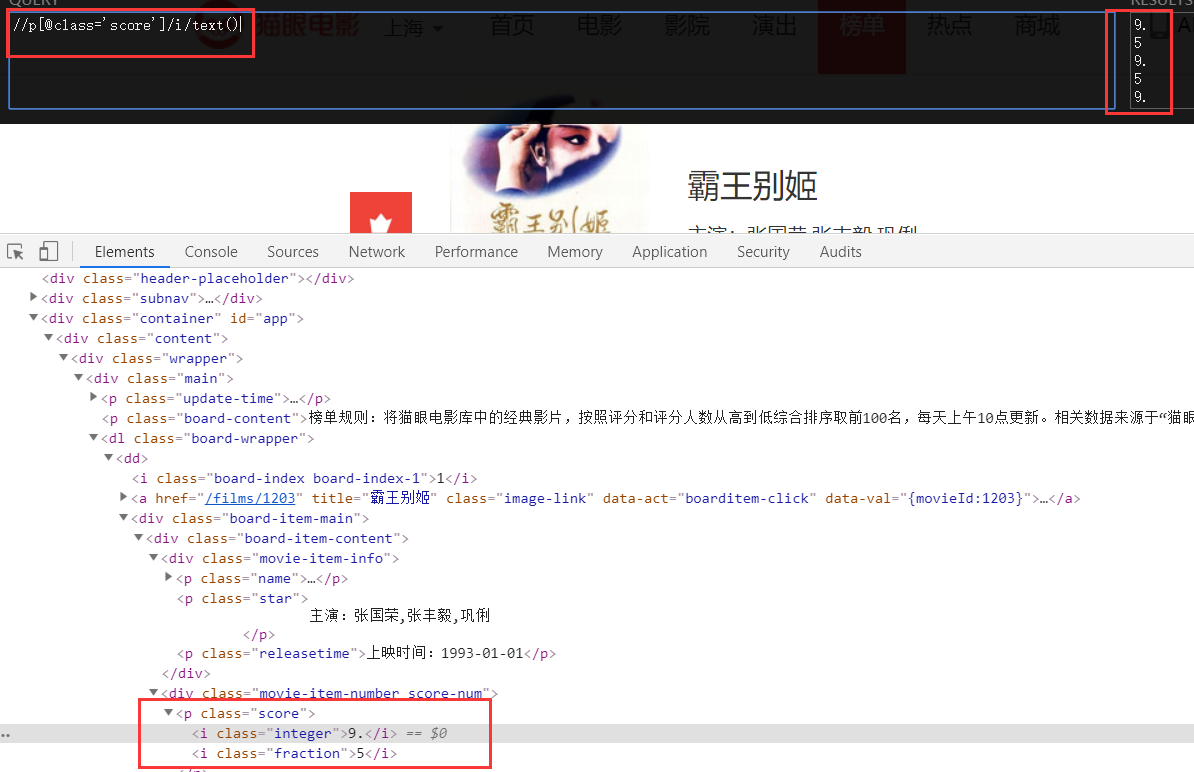
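The star and release-time paths above return text with surrounding line breaks and indentation, and page-wide results lose track of which field belongs to which movie. The full code therefore loops over each dd element, uses paths starting with "." and wraps them in normalize-space(). A minimal sketch of that pattern, assuming the hypothetical simplified markup below (names and dates are placeholders):

```python
from lxml import etree

# Hypothetical, simplified markup for two movies. Only the class names come
# from the article; the line breaks inside <p class="star"> imitate the
# whitespace that makes raw text() results messy.
SAMPLE = """
<dl class="board-wrapper">
  <dd>
    <p class="star">
        Star: Actor A
    </p>
    <p class="releasetime">Release: 2000-01-01</p>
  </dd>
  <dd>
    <p class="star">
        Star: Actor B
    </p>
    <p class="releasetime">Release: 2001-02-02</p>
  </dd>
</dl>
"""

tree = etree.HTML(SAMPLE)

# Raw text() keeps the surrounding newlines and indentation.
raw_star = tree.xpath("//p[@class='star']/text()")[0]
print(repr(raw_star))

rows = []
for dd in tree.xpath("//dl[@class='board-wrapper']/dd"):
    # The leading "." restricts the search to the current <dd>, and
    # normalize-space() trims the text and collapses inner whitespace.
    rows.append({
        'to_star': dd.xpath("normalize-space(.//p[@class='star']/text())"),
        'release_time': dd.xpath("normalize-space(.//p[@class='releasetime']/text())"),
    })
print(rows)
```

Dropping the leading dot inside the loop would make every path search the whole page again, so each row would receive the first movie's actors and release date.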

Get the scores of all movies on a page:

//p[@class='score']/i/text()
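This expression returns the score in more than one text piece, which is why the full code unpacks it as x, y and concatenates the two. A sketch of a more forgiving variant, assuming hypothetical markup where the score is split across two i elements (the integer/fraction class names are an assumption): joining the list works for any number of pieces and yields an empty string instead of a ValueError when a movie has no score.

```python
from lxml import etree

# Hypothetical markup: one scored movie, one without a score.
SAMPLE = """
<dl class="board-wrapper">
  <dd><p class="score"><i class="integer">9.</i><i class="fraction">5</i></p></dd>
  <dd><p class="score"></p></dd>
</dl>
"""

tree = etree.HTML(SAMPLE)
scores = []
for dd in tree.xpath("//dl[@class='board-wrapper']/dd"):
    parts = dd.xpath(".//p[@class='score']/i/text()")
    # ''.join tolerates 0, 1 or 2 pieces; "x, y = parts" would raise
    # ValueError on the second <dd>, where parts is an empty list.
    scores.append(''.join(parts))

print(scores)  # ['9.5', '']
```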

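Get the poster image URL of every movie on a page. The full code reads the data-src attribute rather than src, since the page appears to lazy-load its posters and keep the real address in data-src:

//img[@class='board-img']/@data-src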
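The full code reads data-src rather than src for this image. A minimal sketch, assuming hypothetical lazy-loading markup in which the HTML returned by requests carries only data-src (a browser's JavaScript would fill in src later, which is what the developer tools show), illustrates why that choice matters. The example.com URL is a placeholder.

```python
from lxml import etree

# Hypothetical lazy-loading markup: the real address sits in data-src,
# and there is no src attribute in the raw HTML.
SAMPLE = """
<dl class="board-wrapper">
  <dd>
    <a href="#" class="image-link">
      <img data-src="https://example.com/poster-a.jpg" class="board-img" />
    </a>
  </dd>
</dl>
"""

tree = etree.HTML(SAMPLE)
src = tree.xpath("//img[@class='board-img']/@src")            # nothing to find
data_src = tree.xpath("//img[@class='board-img']/@data-src")  # the real URL

# Same relative path as the full code, evaluated from one <dd>.
dd = tree.xpath("//dl[@class='board-wrapper']/dd")[0]
poster = dd.xpath("normalize-space(./a/img[@class='board-img']/@data-src)")
print(src, data_src, poster)
```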

Full code:

```python
#!/usr/bin/env python
# coding: utf-8

import json
import time

import requests
from lxml import etree


class Item:
    """One movie entry from the board page."""

    def __init__(self):
        self.movie_name = None       # movie name
        self.to_star = None          # starring actors
        self.release_time = None     # release date
        self.score = None            # score
        self.picture_address = None  # poster image URL


class GetMaoYan:

    def get_html(self, url):
        """Fetch a page and return its HTML text, or None on any failure."""
        headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.103 Safari/537.36'
        }
        try:
            response = requests.get(url=url, headers=headers, timeout=10)
        except requests.RequestException:
            return None
        if response.status_code == 200:
            return response.text
        return None

    def get_content(self, html):
        items = []
        if not html:
            return items  # the request failed, so there is nothing to parse
        content = etree.HTML(html)
        # every <dd> inside the board list is one movie
        all_list = content.xpath("//dl[@class='board-wrapper']/dd")
        for i in all_list:
            item = Item()
            # normalize-space() trims the text and collapses spaces and newlines
            item.movie_name = i.xpath("normalize-space(.//p[@class='name']/a/text())")
            item.to_star = i.xpath("normalize-space(.//p[@class='star']/text())")
            item.release_time = i.xpath("normalize-space(.//p[@class='releasetime']/text())")
            # the score comes back as separate text pieces (integer part and
            # fraction); joining them also copes with a missing score
            item.score = ''.join(i.xpath(".//p[@class='score']/i/text()"))
            item.picture_address = i.xpath("normalize-space(./a/img[@class='board-img']/@data-src)")
            items.append(item)
        return items

    def write_to_txt(self, items):
        with open('result.txt', 'a', encoding='utf-8') as f:
            for item in items:
                content_dict = {
                    'movie_name': item.movie_name,
                    'to_star': item.to_star,
                    'release_time': item.release_time,
                    'score': item.score,
                    'picture_address': item.picture_address,
                }
                print(content_dict)
                # one JSON object per line; ensure_ascii=False keeps Chinese text readable
                f.write(json.dumps(content_dict, ensure_ascii=False) + '\n')

    def main(self, offset):
        url = 'https://maoyan.com/board/4?offset=' + str(offset)
        html = self.get_html(url)
        items = self.get_content(html)
        self.write_to_txt(items)


if __name__ == '__main__':
    st = GetMaoYan()
    for i in range(10):
        st.main(offset=i * 10)
        time.sleep(1)  # pause between pages to go easy on the server
```

Run results:

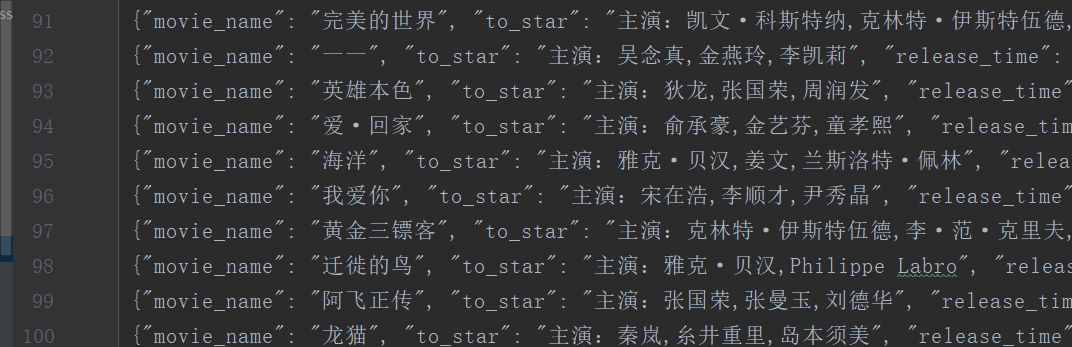

Text output (result.txt):
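Each line of result.txt is one JSON object, written by write_to_txt with json.dumps. As a minimal sketch, the file can be loaded back into Python like this; load_results is a hypothetical helper, and the record in the demo is made-up placeholder data written the same way write_to_txt does.

```python
import json
import os
import tempfile


def load_results(path='result.txt'):
    """Read a file written by write_to_txt: one JSON object per line."""
    movies = []
    with open(path, encoding='utf-8') as f:
        for line in f:
            line = line.strip()
            if line:  # skip blank lines
                movies.append(json.loads(line))
    return movies


# Round-trip demo with a hypothetical record in a temporary file.
record = {'movie_name': 'Movie A', 'to_star': 'Star: Actor A',
          'release_time': 'Release: 2000-01-01', 'score': '9.5',
          'picture_address': 'https://example.com/poster-a.jpg'}
with tempfile.NamedTemporaryFile('w', encoding='utf-8', suffix='.txt',
                                 delete=False) as tmp:
    tmp.write(json.dumps(record, ensure_ascii=False) + '\n')
movies = load_results(tmp.name)
os.remove(tmp.name)
print(movies[0]['movie_name'], movies[0]['score'])
```

Because write_to_txt opens the file in append mode ('a'), running the scraper twice leaves every movie in the file twice; deleting result.txt before a fresh run avoids that.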

