Python3 完整实现DNN

发布时间:2019-09-18 07:23:02编辑:auto阅读(2825)

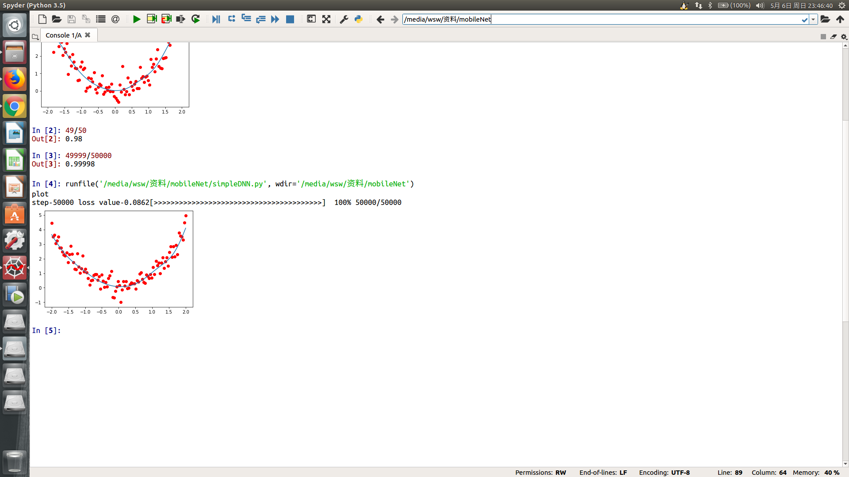

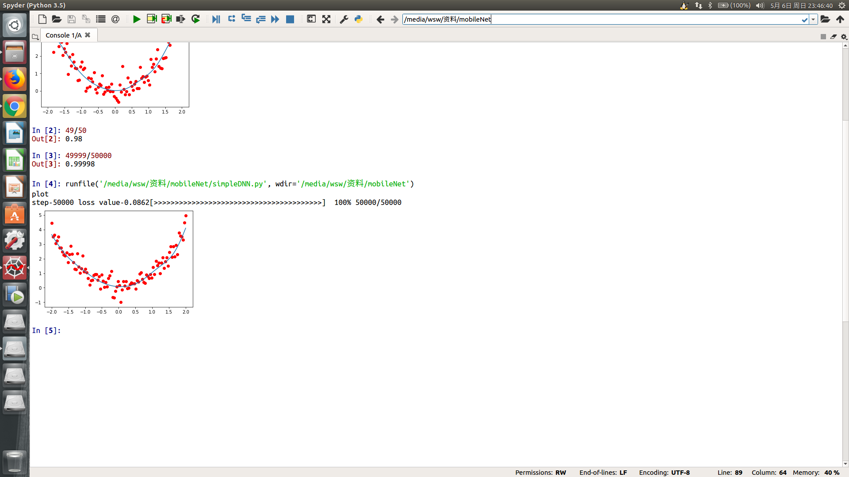

完整实现DNN,包括前向传播和反向传播。实现一个2次函数的拟合。

#!/usr/bin/env python3

# -*- coding: utf-8 -*-

"""

Created on Sun May 6 16:11:40 2018

@author: wsw

"""

# construct simple DNN

import numpy as np

import matplotlib.pyplot as plt

import sys

def generate_data():

x = np.linspace(-2,2,100)[np.newaxis,:]

noise = np.random.normal(0.0,0.5,size=(1,100))

y = x**2+noise

return x,y

class DNN():

def __init__(self,input_nodes=1,hidden1_nodes=4,hidden2_nodes=4,output_nodes=1):

self.input_nodes = input_nodes

self.hidden1_nodes = hidden1_nodes

self.hidden2_nodes = hidden2_nodes

self.output_nodes = output_nodes

self.build_DNN()

def build_DNN(self):

np.random.seed(1)

# Layer1 parameter

self.w1 = np.random.normal(0.0,0.1,size=(self.hidden1_nodes,self.input_nodes))

self.b1 = np.zeros(shape=(self.hidden1_nodes,1))

# Layer2 parameter

self.w2 = np.random.normal(0.0,0.2,size=(self.hidden2_nodes,self.hidden1_nodes))

self.b2 = np.ones(shape=(self.hidden2_nodes,1))

# Layer3 parameter

self.w3 = np.random.normal(0.0,0.5,size=(self.output_nodes,self.hidden2_nodes))

self.b3 = np.zeros(shape=(self.output_nodes,1))

def forwardPropagation(self,inputs):

self.z1 = np.matmul(self.w1,inputs) + self.b1

self.a1 = 1/(1+np.exp(-self.z1))

self.z2 = np.matmul(self.w2,self.a1) + self.b2

self.a2 = 1/(1+np.exp(-self.z2))

self.z3 = np.matmul(self.w3,self.a2) + self.b3

self.a3 = self.z3

def backwardPropagation(self,da,a,a_1,w,b,last=False):

'''

da:current layer activation output partial devirate result

a:current layer activation output

a_1:previous layer of current layer activation output

w:current parameter

b:current bias

'''

# dz = da/dz

if last:

dz = da

else:

dz = a*(1-a)*da

# dw = dz/dw

nums = da.shape[1]

dw = np.matmul(dz,a_1.T)/nums

db = np.mean(dz,axis=1,keepdims=True)

# da_1 = dz/da_1

da_1 = np.matmul(w.T,dz)

w -= 0.5*dw

b -= 0.5*db

return da_1

def train(self,x,y,max_iter=50000):

for i in range(max_iter):

self.forwardPropagation(x)

#print(self.a3)

loss = 0.5*np.mean((self.a3-y)**2)

da = self.a3-y

da_2 = self.backwardPropagation(da,self.a3,self.a2,self.w3,self.b3,True)

da_1 = self.backwardPropagation(da_2,self.a2,self.a1,self.w2,self.b2)

da_0 = self.backwardPropagation(da_1,self.a1,x,self.w1,self.b1)

self.view_bar(i+1,max_iter,loss)

return self.a3

def view_bar(self,step,total,loss):

rate = step/total

rate_num = int(rate*40)

r = '\rstep-%d loss value-%.4f[%s%s]\t%d%% %d/%d'%(step,loss,'>'*rate_num,'-'*(40-rate_num),

int(rate*100),step,total)

sys.stdout.write(r)

sys.stdout.flush()

if __name__ == '__main__':

x,y = generate_data()

plt.scatter(x,y,c='r')

plt.ion()

print('plot')

dnn = DNN()

predict = dnn.train(x,y)

plt.plot(x.flatten(),predict.flatten(),'-')

plt.show()

上一篇: python--文件操作删除某行

下一篇: Python实现简单的HTTP服务器(支

- openvpn linux客户端使用

52145

- H3C基本命令大全

52037

- openvpn windows客户端使用

42234

- H3C IRF原理及 配置

39078

- Python exit()函数

33591

- openvpn mac客户端使用

30544

- python全系列官方中文文档

29192

- python 获取网卡实时流量

24202

- 1.常用turtle功能函数

24103

- python 获取Linux和Windows硬件信息

22439

- LangChain1.0-Agent-部署/上线(开发人员必备)

237°

- LangChain1.0-Agent-Spider实战(爬虫函数替代API接口)

280°

- LangChain1.0-Agent(进阶)本地模型+Playwright实现网页自动化操作

283°

- LangChain1.0-Agent记忆管理

265°

- LangChain1.0-Agent接入自定义工具与React循环

299°

- LangChain1.0-Agent开发流程

272°

- LangChain1.0调用vllm本地部署qwen模型

298°

- LangChain-1.0入门实践-搭建流式响应的多轮问答机器人

300°

- LangChain-1.0入门实战-1

302°

- LangChain-1.0教程-(介绍,模型接入)

322°

- 姓名:Run

- 职业:谜

- 邮箱:383697894@qq.com

- 定位:上海 · 松江