python spark windows

Published: 2019-08-31 09:43:33 · Editor: auto · Views: 2093

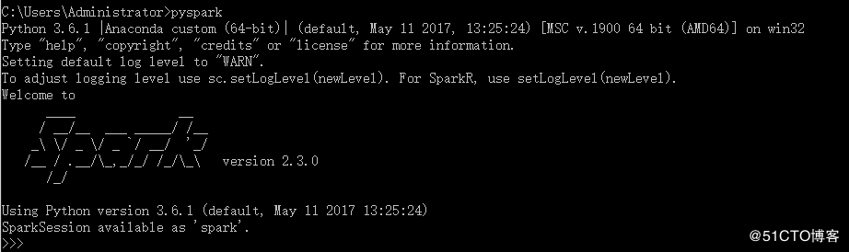

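- Also add %SPARK_HOME%/bin to the PATH environment variable.

- Then open a command prompt and run the pyspark command. If the PySpark shell starts, the environment variables are set correctly.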
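The PATH check above can also be done from Python before opening the shell. The following is a minimal sketch, not part of the original post; the helper names `find_on_path` and `spark_bin_on_path` are made up for illustration.

```python
import os
import shutil


def find_on_path(command, path=None):
    """Return the full path of `command` if it is found on PATH, else None."""
    return shutil.which(command, path=path)


def spark_bin_on_path(spark_home, path=None):
    """Return True if <spark_home>/bin is one of the PATH entries."""
    path = os.environ.get("PATH", "") if path is None else path
    target = os.path.normcase(os.path.normpath(os.path.join(spark_home, "bin")))
    entries = [os.path.normcase(os.path.normpath(p))
               for p in path.split(os.pathsep) if p]
    return target in entries


if __name__ == "__main__":
    home = os.environ.get("SPARK_HOME")
    print("SPARK_HOME:", home)
    if home:
        print("bin on PATH:", spark_bin_on_path(home))
    # On Windows, shutil.which also tries the extensions in PATHEXT (e.g. .cmd)
    print("pyspark launcher:", find_on_path("pyspark"))
```

If the launcher prints as `None`, the PATH entry is probably missing or the command prompt was opened before the variable was saved.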

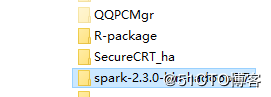

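1. Download Spark (the paths below use spark-2.3.0-bin-hadoop2.7):

Extract it to drive D.

Add the environment variable SPARK_HOME = D:\spark-2.3.0-bin-hadoop2.7.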
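To confirm that SPARK_HOME points at a complete distribution, a short script can look for the folders used later in this post. This is a sketch under the assumption that the archive has the usual `bin`, `python/pyspark` and `python/lib` layout; `describe_spark_home` is a hypothetical helper name.

```python
import glob
import os


def describe_spark_home(spark_home):
    """Report which expected parts of a Spark distribution exist under spark_home."""
    lib_dir = os.path.join(spark_home, "python", "lib")
    return {
        "bin": os.path.isdir(os.path.join(spark_home, "bin")),
        "python/pyspark": os.path.isdir(os.path.join(spark_home, "python", "pyspark")),
        # The py4j version in the file name varies between Spark releases
        "py4j_zips": sorted(glob.glob(os.path.join(lib_dir, "py4j-*-src.zip"))),
    }


if __name__ == "__main__":
    # Falls back to the path used in this post when SPARK_HOME is not set
    home = os.environ.get("SPARK_HOME", r"D:\spark-2.3.0-bin-hadoop2.7")
    print(describe_spark_home(home))
```

The `py4j_zips` entry shows the exact py4j file name bundled with the installed release, which is useful when a script needs to add it to `sys.path`.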

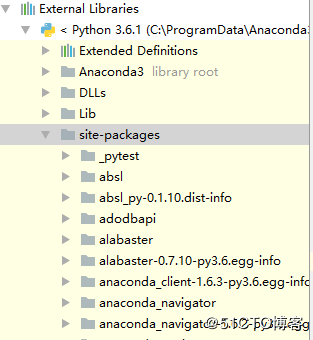

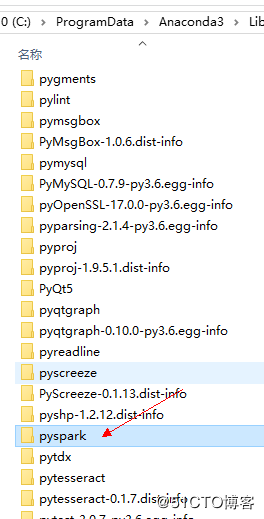

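Locate the site-packages directory of the interpreter that PyCharm uses.

Right-click it to open the directory, then copy the python/pyspark folder from D:\spark-2.3.0-bin-hadoop2.7 into that site-packages directory.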
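The copy step can be scripted as well. The sketch below assumes it is run with the same interpreter that the PyCharm project uses; `site_packages_dir` and `copy_pyspark` are illustrative names, not something from the original post.

```python
import os
import shutil
import sysconfig


def site_packages_dir():
    """Return the site-packages directory of the interpreter running this script."""
    return sysconfig.get_paths()["purelib"]


def copy_pyspark(spark_home, dest_dir):
    """Copy <spark_home>/python/pyspark into dest_dir and return the new path."""
    src = os.path.join(spark_home, "python", "pyspark")
    dst = os.path.join(dest_dir, "pyspark")
    if os.path.exists(dst):
        # Refuse to overwrite an existing copy so nothing is lost silently
        raise FileExistsError(dst + " already exists; remove it first")
    return shutil.copytree(src, dst)


if __name__ == "__main__":
    print("site-packages:", site_packages_dir())
    # Uncomment to perform the copy with the path used in this post:
    # copy_pyspark(r"D:\spark-2.3.0-bin-hadoop2.7", site_packages_dir())
```

Printing the directory first makes it easy to confirm that the script and PyCharm agree on which interpreter is in use.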

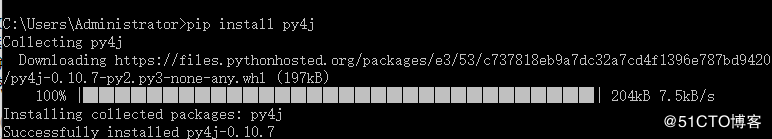

Install py4j.
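py4j is usually installed with `pip install py4j` in the interpreter that PyCharm uses. A quick way to confirm that both packages are visible to that interpreter is sketched below; `missing_modules` is a hypothetical helper, not a library function.

```python
import importlib.util


def missing_modules(names):
    """Return the names from `names` that the current interpreter cannot find."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # An empty list means both packages can be imported
    print("missing:", missing_modules(["py4j", "pyspark"]))
```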

Try the following code:

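    import glob
    import os
    import sys
    from operator import add

    # Path to the Spark installation folder (raw string so backslashes stay literal)
    SPARK_HOME = r"D:\spark-2.3.0-bin-hadoop2.7"
    os.environ['SPARK_HOME'] = SPARK_HOME

    # Append pyspark and the bundled py4j zip to the Python path.
    # The py4j version in the file name depends on the Spark release,
    # so it is matched with a wildcard instead of being hard-coded.
    sys.path.append(os.path.join(SPARK_HOME, "python"))
    sys.path.extend(glob.glob(os.path.join(SPARK_HOME, "python", "lib", "py4j-*-src.zip")))

    from pyspark import SparkContext

    if __name__ == '__main__':
        inputFile = r"D:\Harry.txt"
        # saveAsTextFile creates a directory with this name; it must not exist yet
        outputFile = r"D:\Harry1.txt"

        sc = SparkContext()
        text_file = sc.textFile(inputFile)
        # Split each line into words, pair each word with 1, then sum the counts per word
        counts = (text_file.flatMap(lambda line: line.split(' '))
                  .map(lambda word: (word, 1))
                  .reduceByKey(add))
        counts.saveAsTextFile(outputFile)
        sc.stop()

If the job finishes without errors, the setup works.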
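To check the result, the same count can be computed in plain Python and compared with what Spark wrote. This is a sketch under two assumptions: the input is small enough to count in memory, and each line in the output directory's `part-*` files is the text form of a `(word, count)` tuple, which is how PySpark writes tuples with `saveAsTextFile`. The names `word_count` and `read_saved_counts` are illustrative.

```python
import ast
import glob
import os
from collections import Counter


def word_count(lines):
    """Count words like the Spark job does: split each line on single spaces."""
    counts = Counter()
    for line in lines:
        counts.update(line.split(" "))
    return dict(counts)


def read_saved_counts(output_dir):
    """Parse the part-* files in a saveAsTextFile output directory into a dict."""
    result = {}
    for part in sorted(glob.glob(os.path.join(output_dir, "part-*"))):
        with open(part, encoding="utf-8") as handle:
            for line in handle:
                line = line.strip()
                if line:
                    # Each line is assumed to look like ('word', 3)
                    word, count = ast.literal_eval(line)
                    result[word] = count
    return result
```

For example, `word_count(open(r"D:\Harry.txt", encoding="utf-8").read().splitlines())` should equal `read_saved_counts(r"D:\Harry1.txt")` when the job ran correctly and the input file is UTF-8 encoded.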
