A Simple Python Web Crawler

Published: 2019-09-23 17:03:07 | Editor: auto | Views: 1906

Scraping second-hand housing listings from Lianjia

import requests

import re

from bs4 import BeautifulSoup

import csv

url = ['https://cq.lianjia.com/ershoufang/']

for i in range(2,101):

url.append('https://cq.lianjia.com/ershoufang/pg%s/'%(str(i)))

# 模拟谷歌浏览器

headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3497.100 Safari/537.36'}

for u in url:

r = requests.get(u,headers=headers)

soup = BeautifulSoup(r.text,'lxml').find_all('li', class_='clear LOGCLICKDATA')

for i in soup:

ns = i.select('div[class="positionInfo"]')[0].get_text()

region = ns.split('-')[1].replace(' ','').encode('gbk')

rem = ns.split('-')[0].replace(' ','').encode('gbk')

ns = i.select('div[class="houseInfo"]')[0].get_text()

xiaoqu_name = ns.split('|')[0].replace(' ','').encode('gbk')

huxing = ns.split('|')[1].replace(' ','').encode('gbk')

pingfang = ns.split('|')[2].replace(' ','').encode('gbk')

chaoxiang = ns.split('|')[3].replace(' ','').encode('gbk')

zhuangxiu = ns.split('|')[4].replace(' ','').encode('gbk')

danjia = re.findall("\d+",i.select('div[class="unitPrice"]')[0].string)[0]

zongjia = i.select('div[class="totalPrice"]')[0].get_text().encode('gbk')

out=open("/data/data.csv",'a')

csv_write=csv.writer(out)

data = [region,xiaoqu_name,rem,huxing,pingfang,chaoxiang,zhuangxiu,danjia,zongjia]

csv_write.writerow(data)

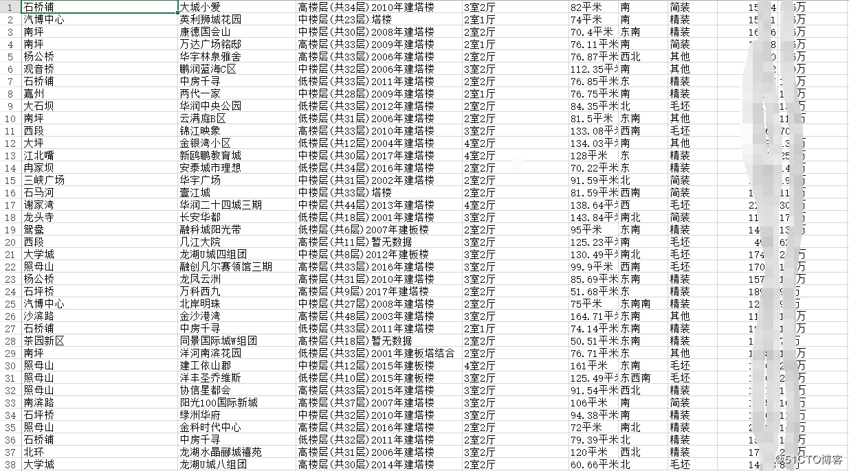

out.close()数据结果
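The script above indexes straight into the results of `split('|')` and `re.findall`, so a listing that omits a field (or a price element without digits) would raise `IndexError` and stop the whole crawl. The following is a minimal sketch of a more defensive way to parse those strings, assuming the same `a | b | c` layout. The helper names `parse_house_info` and `first_number`, the English field names, and the sample row are hypothetical and not part of the original script.

```python
import re

# Hypothetical field names; the original script uses pinyin variables instead.
HOUSE_FIELDS = ("community", "layout", "area", "orientation", "decoration")


def parse_house_info(text, fields=HOUSE_FIELDS):
    """Split an 'a | b | c' string into a dict, padding missing fields with ''."""
    parts = [p.replace(" ", "") for p in text.split("|")]
    parts += [""] * (len(fields) - len(parts))  # pad rows that are too short
    return dict(zip(fields, parts))  # zip ignores any extra trailing parts


def first_number(text, default=""):
    """Return the first run of digits in text, or default if there is none."""
    match = re.search(r"\d+", text or "")
    return match.group(0) if match else default


# Made-up sample row, for illustration only
sample = "SomeCommunity | 3室2厅 | 98.5平米 | 南 | 精装"
row = parse_house_info(sample)
print(row["layout"], first_number("单价12345元/平米"))
```

In the crawler, the text from the `houseInfo` element would be passed to `parse_house_info` and the text from the `unitPrice` element to `first_number`, so an incomplete listing produces empty cells in the CSV instead of an exception.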
